Bash expansion techniques for a more efficient workflow

For any project, you need a quick and efficient way to wrangle your files. If you use Unix, Bash and Zsh are powerful tools to help achieve this.

I recently needed to rename a file so that all its underscores were replaced with dash characters, to match the convention of the project. I could do this manually pretty quickly, but I knew there was a Bash built-in one-liner waiting to be discovered, so I went down the rabbit hole to learn about Bash’s shell expansions and history expansion. See the “history expansion” section for how I solved the underscore/dash issue.

Bash has seven types of expansion:

- brace expansion

- tilde expansion

- parameter and variable expansion

- command substitution

- arithmetic expansion

- word splitting

- filename expansion

The documentation is good and concise for each of these, so rather than try to recreate it, I’ll go over examples of how I use some of them.

Shell parameter expansion

Example: batch converting images to WebP

I use parameter expansion frequently while maintaining this blog. We serve images in WebP format, so I generally loop over all the JPEGs and/or PNGs (after cropping and/or scaling) and convert them using cwebp:

for f …

linux shell tips

Creating API for Invoice Generator Using C# and Minimal APIs

In this blog post, we will explore how to create a robust invoice generator API using .NET 9 and minimal APIs, with a focus on integrating it with an existing Vue frontend whose implementation we covered in a previous blog post.

We will use the minimal APIs framework to create a RESTful API that can be easily used by our Vue application. Minimal APIs require less boilerplate code and configuration than traditional controller-based approaches. They are well suited for smaller APIs, microservices, or serverless functions. You can learn more about choosing between controller-based APIs and minimal APIs here.

Prerequisites

Before going further into the code, we should make sure to have the following tools and technologies installed:

- .NET 9 (the latest version of the .NET runtime)

Additionally, we already have a Vue frontend from our previous post that lacks API connectivity. We will guide you through the process of integrating the Invoice Generator API with that Vue application.

Setting up the Project

We will use the dotnet command to create a new .NET 9 project and set up the minimal APIs framework using the steps below:

- Create a new .NET Core Web API project …

csharp database javascript frameworks programming

Vector Search: The Future of Finding What Matters

Photo by Ann H on Pexels

In a world flooded with data in many formats, such as images, documents, text, and videos, traditional search methods are starting to show their age. Today, vector search is revolutionizing how we retrieve and understand information. If you wonder how Spotify can recommend the perfect song or how Google can find nearly exact image matches, vector search is the wizard behind the curtain. Let’s see how it has become a game changer.

What Is Vector Search?

At its core, vector search is a method of finding similar items in a dataset by representing them as vectors — essentially, lists of numbers in a multi-dimensional space. Unlike keyword-based search, which relies on exact matches or predefined rules, vector search focuses on semantic similarity. This means it can understand the meaning or context behind data, not just the words or pixels on the surface.
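To make the idea of semantic similarity concrete, here is a minimal sketch in plain Python. It assumes cosine similarity as the similarity measure (one common choice, not the only one), and the three-dimensional vectors and item names are made up for illustration; real embeddings come from a trained model and have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search(query_vec, items):
    """Return item names ordered from most to least similar to the query."""
    return sorted(
        items,
        key=lambda name: cosine_similarity(query_vec, items[name]),
        reverse=True,
    )


# Hypothetical embeddings, invented for this example.
items = {
    "forest cabin listing": [0.9, 0.8, 0.1],
    "city apartment listing": [0.1, 0.2, 0.9],
}
query = [0.85, 0.75, 0.15]  # hypothetical embedding of the search text

ranked = search(query, items)
print(ranked[0])  # the item whose vector points in the most similar direction
```

The ranking depends only on the direction of the vectors, which is why two texts with no words in common can still match if their embeddings are close.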

Imagine you’re searching for a cozy cabin in the woods. A traditional search might get stuck on the exact words in this query, missing a listing for something similar like a snug retreat nestled in a forest. Vector search, however, can connect the dots …

artificial-intelligence machine-learning search

When to DIY vs Hire for Ecommerce Development

A Practical Guide for Business Owners

Many ecommerce businesses start with a scrappy DIY mindset. And that works — until it doesn’t. Knowing when to build it yourself and when to bring in a professional can mean the difference between momentum and mess.

We’ve worked with business owners who built their first site in a weekend — and others who sunk $50,000 into a platform they barely used. Here’s how to figure out which path fits your goals, skills, and budget.

When to DIY (With Confidence)

There are plenty of situations where handling development yourself makes good business sense:

- You’re on a tight budget and need proof-of-concept

- You’re using simple, user-friendly tools like Shopify, Wix, or Squarespace

- You just need a basic store or landing page

- You’re comfortable with tech and enjoy learning new tools

Helpful DIY Tools

- Shopify

- WordPress / WooCommerce (basic setup)

- Webflow

- Canva (for design)

- Zapier (for automation)

Watch Out For

- Mobile layout issues that won’t go away

- Slow site speeds or broken checkout processes

- Inventory or shipping errors you can’t fix

When the fixes start taking more time than they’re worth — or cost you sales — it’s time to …

ecommerce

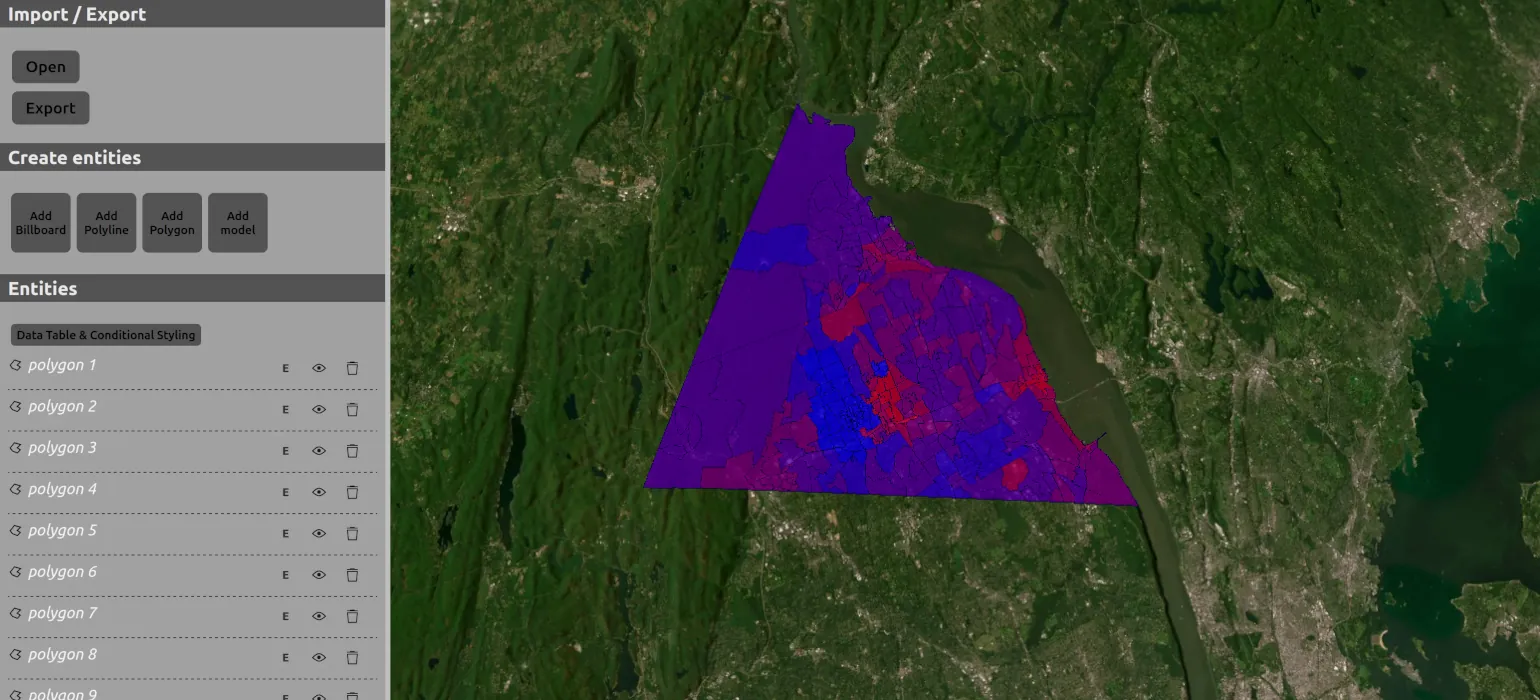

New Cesium KML-CZML Editor Features: Custom Data & Styling, Google 3D Tiles, and More

I have made some updates to the Cesium KML-CZML editor I created and maintain.

The most important additions and changes are:

- Support for Google 3D tiles

- Support for writing many more features, including interpolation and time series data for some properties. There are still no editing capabilities for these properties, but while previously the editor would strip these values from the data, it will now copy them into the output file.

- Export to KML and KMZ

- Support for custom data and styling using that custom data

- Switched frontend framework from Vue to React

Adding support for Google 3D tiles is what caused me to create this major version update. In a nutshell, Cesium has its own way of adding reactivity to Entities and Vue doesn’t always play nice with it. If I add Google 3D tiles to the scene, it appears that Cesium Entities hold references to the scene, which causes Vue to apply reactive getters and setters to the whole scene.

So, I’ve switched to using React because it’s easier to control which parts should be reactive, as well as when and how you update Cesium Entities and UI components.

The next important piece is the CZML exporter. The main …

cesium google-earth gis open-source visionport kml

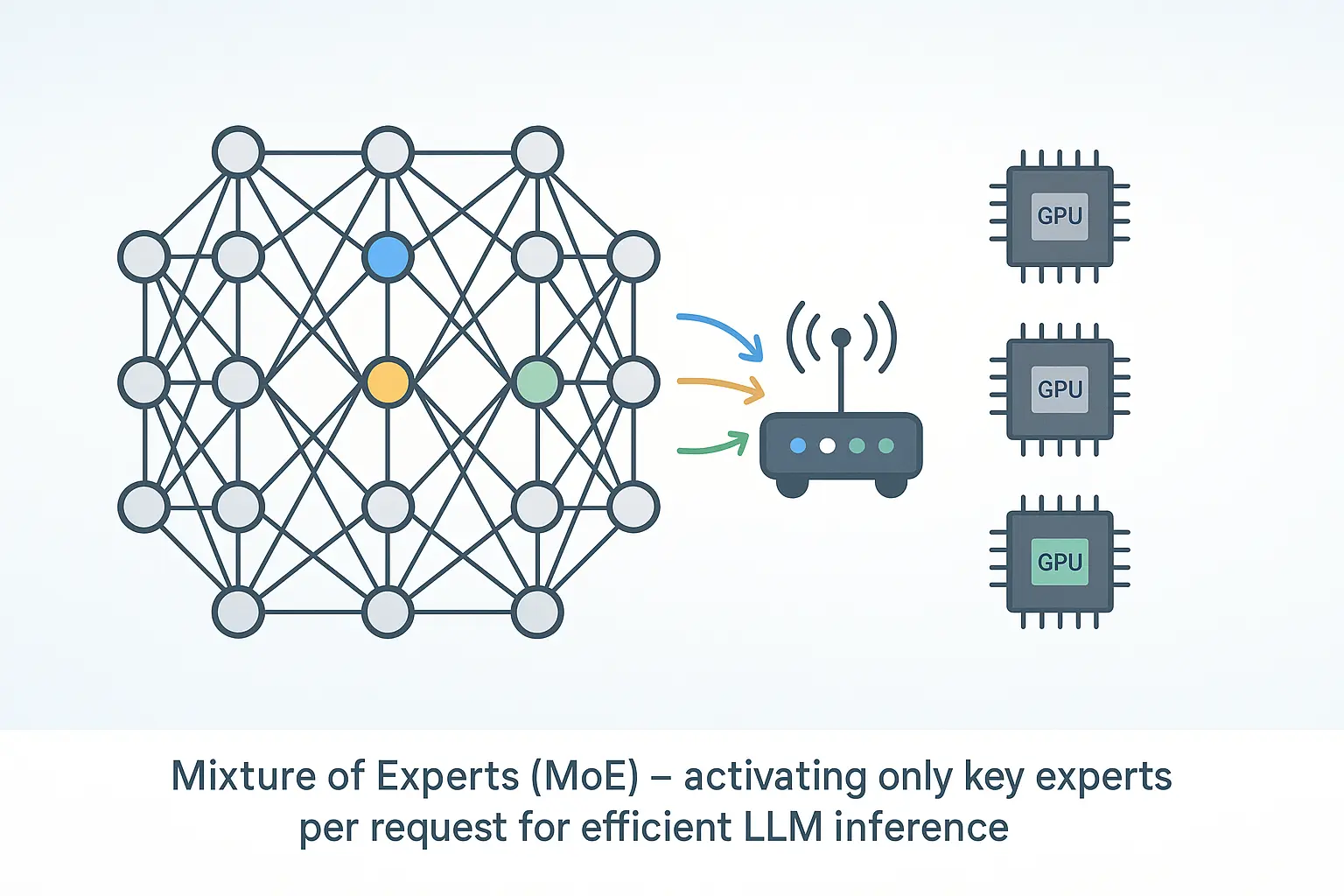

Deploying LLMs Efficiently with Mixture of Experts

1. Why MoE?

Modern language models can have hundreds of billions of parameters. That power comes with a cost: high latency, high memory, and high energy use. Mixture‑of‑Experts (MoE) tackles the problem by letting only a few specialised sub‑networks run for each token, cutting compute while keeping quality.

In this post you’ll get:

- A short intro to MoE

- A simple diagram that shows how it works

- A look at Open‑Source MoE Models

- A quick guide to running one on your own machine with Docker + Ollama

- Deployment tips and extra resources

2. Key Ideas

| Term | Quick meaning |
|---|---|
| Dense model | Every weight is used for every token. |
| Expert | A feed‑forward network inside the layer. |
| Router | Tiny layer that scores experts for each token. |
| MoE layer | Router + experts; only the top‑k experts run. |
| Sparse activation | Most weights sleep for most tokens. |

Analogy: Think of triage in a hospital. The nurse (router) sends you to the right specialist (expert) instead of paging every doctor.
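The terms above can be sketched in a few lines of plain Python. This is a toy illustration, not how any particular model implements it: the router is a single hypothetical weight matrix, the "experts" are simple scaling functions standing in for feed-forward networks, and the gate weights are a softmax over the selected experts only, which is one common choice among several.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    """Toy stand-in for an expert: multiplies every feature by `scale`."""
    return lambda token: [scale * x for x in token]


def moe_layer(token, router_weights, experts, k=2):
    """Score every expert, run only the top-k, and mix their outputs."""
    # Router: one score per expert (dot product of the token with that expert's row).
    scores = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    # Sparse activation: keep the indices of the k highest-scoring experts.
    chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    # Gate weights: softmax over the chosen experts' scores only.
    gates = softmax([scores[i] for i in chosen])
    output = [0.0] * len(token)
    for gate, i in zip(gates, chosen):
        expert_out = experts[i](token)  # the unchosen experts never run
        output = [o + gate * e for o, e in zip(output, expert_out)]
    return output, chosen


# Hypothetical values, invented for this example.
experts = [make_expert(s) for s in (1.0, 2.0, 3.0, 4.0)]
router_weights = [[0.1, 0.0], [2.0, 0.0], [0.5, 0.0], [1.0, 0.0]]
token = [1.0, 0.0]

output, chosen = moe_layer(token, router_weights, experts, k=2)
print(chosen)  # indices of the experts that actually ran
```

With four experts and `k=2`, half of the expert weights stay idle for this token, which is where the compute saving comes from.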

3. How a Token Moves Through an MoE Layer

Input Token
     │
     ▼
┌────────┐
│ Router │ (scores all experts)
└────────┘
     │ selects top‑k …

artificial-intelligence

Implementing Azure Blob Storage in .NET 9

Businesses keep moving toward scalable and cloud-based architectures. With this in mind, a client that was dealing with random errors in a .NET app when saving files locally on the web server decided to get rid of that process and replace it with an Azure Blob Storage implementation.

Why use Azure Blob Storage? It’s an efficient cloud object storage solution from Microsoft, designed to store unstructured data, optimized for storing and serving documents, media, logs, or binary data, especially in applications that expose this data through an API. The key features are high performance, redundancy, reliability, and scalability. There’s an SDK that we can use for easy integration and development, be it in .NET or other languages.

Let’s take a look at what that change involves. For this example, we will set up the integration in a .NET 9 application:

Install the NuGet package required to connect to Azure Blob Storage. We can do it with the dotnet CLI, or through the NuGet package manager.

dotnet add package Azure.Storage.Blobs

Then, we need to configure our connection in our appsettings.json file. We will use the connection string that Azure provides us when we …

dotnet cloud storage

Rebuilding a Modern App in Rails 7 Without JavaScript Frameworks

In the realm of web development, the allure of JavaScript frameworks like React and Vue is undeniable. However, I recently embarked on a mission to rebuild a modern web application using Rails 7, Hotwire, and Turbo, deliberately avoiding any JavaScript frameworks. The outcome was a streamlined stack, improved performance, and a more maintainable codebase.

Why Consider a Framework-Free Approach in 2025?

The complexity introduced by modern JavaScript frameworks can sometimes overshadow their benefits. Managing dependencies, build tools, and the intricacies of client-side rendering often lead to increased development overhead. With the advancements in Rails 7, particularly the introduction of Hotwire and Turbo, it’s now feasible to build dynamic, responsive applications without the need for additional JavaScript frameworks.

The Application: A Simplified Project Management Tool

I had previously built an app that was essentially a simplified version of Trello. The app is a lightweight project management tool featuring:

- Boards and cards

- Drag-and-drop functionality

- Real-time updates

- Commenting system

- User authentication and role management

Originally built with a React …

rails javascript