Intro to DimpleJS, Graphing in 6 Easy Steps

Data visualization is a big topic these days, given the sheer amount of data being collected. Graphing that data makes it much easier to understand what has been collected. There are many tools available for this purpose, and one of the more popular choices is D3.

D3 is very versatile but can be more complicated than necessary for simple graphing. So what if you just want to spin up some graphs quickly? I was recently working on a project where we were trying to do just that, and that is when I was introduced to DimpleJS.

The advantage of using DimpleJS rather than plain D3 is speed. It lets you quickly create customizable graphs from your data, gives you easy access to the underlying D3 objects, and is intuitive to code. I’ve also found the creator, John Kiernander, to be very responsive on Stack Overflow when I ran into issues.

I was really impressed with how flexible DimpleJS is. You can make a wide variety of graphs quickly and easily. You can update the labels on the graph and on the axes, create your own tooltips, add colors and animations, and more.

I thought I’d make a quick example to show just how easy it is to start graphing.

Step 1 …

visualization javascript

DevOpsDays India — 2015

DevOpsDays India 2015 was held at The Royal Orchid in Bengaluru on Sep 12-13, 2015. After saying hello to a few familiar faces I often see at conferences, I collected some goodies and entered the hall. Everything was set up for the talks. Niranjan Paranjape, one of the organizers, was giving the introduction and overview of the conference.

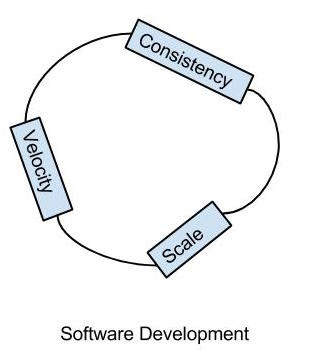

Justin Arbuckle from Chef gave a wonderful keynote talk about the “Hedgehog Concept” and spoke about the importance of consistency, scale and velocity in software development.

He also said, “A small team with generalists who have a specialization, deliver far more than a large team of single skilled people.”

A talk on “DevOps of Big Data infrastructure at scale” was given by Rajat Venkatesh from Qubole. He explained the architecture of Qubole Data Service (QDS), which helps to autoscale the Hadoop cluster. In short, scale-up happens based on data from the Hadoop Job Tracker about the number of jobs running and the time needed to complete them. Scale-down is done by decommissioning a node, and the server to remove is chosen by which one is closest to reaching its hour boundary. This is because most of the cloud service providers charge …
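The scale-down rule described above can be sketched roughly as follows. This is only an illustration, not Qubole's implementation: the `Node` type, the function names, the 10-minute window, and the assumption that instances are billed per started hour are all hypothetical.

```python
from dataclasses import dataclass

# Assumption: the provider bills per started hour of instance uptime.
BILLING_PERIOD_S = 3600


@dataclass
class Node:
    name: str
    uptime_s: int  # seconds since the instance was launched


def seconds_to_boundary(node, period=BILLING_PERIOD_S):
    """Seconds left until the node reaches its next billing boundary."""
    return period - (node.uptime_s % period)


def pick_node_to_decommission(nodes, window_s=600):
    """Return the node closest to its hour boundary, or None if no node
    is within window_s seconds of that boundary."""
    candidates = [n for n in nodes if seconds_to_boundary(n) <= window_s]
    return min(candidates, key=seconds_to_boundary, default=None)
```

With nodes that have been up for 1000, 3500 and 7000 seconds, the second node is picked because it is only 100 seconds away from its boundary.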

automation conference devops containers docker

PgBouncer user and database pool_mode with Scaleway

The recent release of PgBouncer 1.6, a connection pooler for Postgres, brought a number of new features. The two I want to demonstrate today are the per-database and per-user pool_modes. To get this effect previously, one had to run separate instances of PgBouncer. As we shall see, a single instance can now run different pool_modes seamlessly.

There are three pool modes available in PgBouncer, representing how aggressive the pooling becomes: session mode, transaction mode, and statement mode.
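As a rough sketch of what per-database and per-user pool modes might look like in a `pgbouncer.ini` file, consider the fragment below. The database name `appdb`, the user name `reporting`, and the connection details are made up for illustration.

```ini
[databases]
; hypothetical database: use transaction pooling for this database only
appdb = host=127.0.0.1 port=5432 dbname=appdb pool_mode=transaction

[users]
; hypothetical user: use session pooling for this user
reporting = pool_mode=session

[pgbouncer]
; default mode for anything not overridden above
pool_mode = session
```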

Session pool mode is the default, and simply allows you to avoid the startup costs for new connections. PgBouncer connects to Postgres, keeps the connection open, and hands it off to clients connecting to PgBouncer. This handoff is faster than connecting to Postgres itself, as no new backends need to be spawned. However, it offers no other benefits, and many clients/applications already do their own connection pooling, making this the least useful pool mode.

Transaction pool mode is probably the most useful one. It works by keeping a client attached to the same Postgres backend for the duration of a transaction only. In this way, many clients can share the same …

cloud database postgres scalability

YAPC::NA 2015 Conference Report

In June, I attended the Yet Another Perl Conference (North America), held in Salt Lake City, Utah. I was able to take in a training day on Moose, as well as the full 3-day conference.

The Moose Master Class (slides and exercises here) was taught by Dave Rolsky (a Moose core developer), and was a full day of hands-on training and exercises in the Moose object-oriented system for Perl 5. I’ve been experimenting a bit this year with the related project Moo (essentially the best two-thirds of Moose, with quicker startup), and most of the concepts carry over, with just slight differences.

Moose and Moo allow the modern Perl developer to quickly write OO Perl code, saving quite a bit of work from the older “classic” methods of writing OO Perl. Some of the highlights of the Moose class include:

- Sub-classing is discouraged; this is better done using Roles

- Moose eliminates a lot of typing; more typing often means more bugs

- Using namespace::autoclean at the top is a best practice, as it cleans up after Moose

- Roles are what a class does, not what it is. Roles add functionality.

- Use types with MooseX::Types or Type::Tiny (for Moo)

- Attributes can be objects (see slide 231)

Additional helpful …

conference perl

Install Tested Packages on Production Server

One of our customers has us do scheduled monthly OS updates following a specific rollout process. In the first week of the month, we update the test server and wait a week to confirm that everything looks as expected in the application; the following week we apply the very same updates to the production servers.

Until recently, we used aptitude to perform system updates. While doing the update on the test server, we also ran aptitude on the production servers to “freeze” the same packages and versions to be updated the following week. That helped ensure that only tested packages would be updated on the production servers afterward.

Since using aptitude in that way wasn’t particularly efficient, we decided to use apt-get directly, in line with our standard server update process. We still wanted to keep the test and production updates in sync, because software updates released between the test and the production server update are untested in the customer-specific environment. Thus we needed a method to filter out the unneeded packages for the production server update.

In order to do so we have developed a shell script that automates the process and …

automation linux sysadmin ubuntu update

Memcache Full HTML in Ruby on Rails with Nginx

Hi! Steph here, former long-time End Point employee now blogging from afar as a software developer for Pinhole Press. While I’m no longer an employee of End Point, I’m happy to blog and share here.

I recently went down the rabbit hole of figuring out how to cache full HTML pages in memcached and serve those pages via nginx in a Ruby on Rails application, skipping Rails entirely. While troubleshooting this, I could not find much on Google or StackOverflow, except related articles for applying this technique in WordPress.

Here are the steps I went through to get this working:

Replace Page Caching

First, I cloned the page caching gem repository that was taken out of the Rails core on the move from Rails 3 to 4. It writes fully cached pages out to the file system. It can easily be added as a gem to any project, but the decision was made to remove it from the core.

Because of the complexities in cache invalidation across file systems on multiple instances (with load balancing), and the desire to skip a shared/mounted file server, and because the Rails application relies on memcached for standard Rails fragment and view caching throughout the site, the approach was to use memcached for …

performance rails

Liquid Galaxy and the Coral Reefs of London

Exploring coral reefs and studying the diverse species that live in them usually requires some travel. Most of the coral in the world lives in the Indo-Pacific region that includes the Red Sea, Indian Ocean, Southeast Asia and the Pacific. The next largest concentration of reefs is in the Caribbean and Atlantic. Oh, and then there is London.

London, you say?

Yes, the one in England. No, not the coral attaching itself to oil and gas platforms in the North Sea, nor the deep water coral there (admittedly far away from London, but perhaps in the general vicinity on the globe). We’re talking about the heart of London, specifically Cromwell Road. Divers will need to navigate their way there, but scuba gear and a boat won’t be required once they arrive. No worries! Divers can float right up to the Liquid Galaxy in the exhibit hall at The Natural History Museum. The museum opened CORAL REEFS: SECRET CITIES OF THE SEA on March 27th, and the exhibit runs through mid-September this year.

Actually, there are 3 Liquid Galaxies at the Natural History Museum in London to allow a maximum amount of exploration and a minimum of waiting in queue.

Last year, the Natural History Museum engaged End …

event visionport

Old Dog & New Tricks — Giving Interchange a New Look with Bootstrap

Introduction

So your Interchange-based website looks like it walked out of a disco… but you spent a fortune getting the back end working with all those custom applications… What to do?

Interchange is currently being used in numerous very complex implementations. Trying to adapt another platform to replace Interchange is a formidable task, and in many cases the “problem” that users are trying to “solve” by replacing Interchange can be remedied by a relatively simple face lift to the front end. One of the main attractions of Interchange in the past was its ability to scale from a small mom & pop eCommerce application to a mid-level support system for a larger company and its related back end systems. Once the connection to those back end systems has been created, and for as long as you use those related systems, Interchange will continue to be the most economical choice for the job. But that leaves the front end, the part that your customers see and use (with their phones and tablets), as the most immediate target for “modernization”.

Granted, there are new and alternate ways of accessing and presenting data and views to users, but many of those …

css design html interchange javascript