Safari 4 Top Sites feature skews analytics

Safari version 4 has a new “Top Sites” feature that shows thumbnail images of the sites the user most frequently visits (or, until enough history is collected, just generally popular sites).

Martin Sutherland describes this feature in detail and shows how to detect these requests, which set the X-Purpose HTTP header to “preview”.

This matters because Safari uses its normal browsing engine to fetch not just the HTML but also all embedded JavaScript and images, and it runs the page's client-side JavaScript. These preview thumbnails are also refreshed fairly frequently, possibly several times per day per user.

Thus every preview request looks just like a regular user visit. This skews analytics, which see a much higher than average number of views from Safari 4 users, along with lower time-on-site averages and higher bounce rates, since no subsequent page views are registered (at least not as part of the preview function).

The solution is to simply not output any analytics code when the X-Purpose header is set to “preview”. In Interchange this is easily done if you have an include file for your analytics code, by wrapping the file with an [if] block such as this:

[tmp x_purpose][env …

analytics browsers django ecommerce interchange php rails
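The same idea applies outside Interchange. As a minimal sketch for a Python stack (one of the frameworks in this post's tags), the WSGI code below omits the analytics snippet when the request carries X-Purpose: preview. It assumes a WSGI server that exposes request headers CGI-style, so X-Purpose arrives as HTTP_X_PURPOSE; the snippet markup, the /js/analytics.js path, and the helper names are hypothetical placeholders.

```python
ANALYTICS_SNIPPET = '<script src="/js/analytics.js"></script>'  # hypothetical placeholder


def is_preview_request(environ):
    """True when the request carries "X-Purpose: preview"."""
    # CGI/WSGI expose the X-Purpose request header as HTTP_X_PURPOSE.
    purpose = environ.get("HTTP_X_PURPOSE", "")
    # Case-insensitive comparison is a defensive choice, not a requirement.
    return purpose.strip().lower() == "preview"


def analytics_markup(environ):
    """Return the analytics snippet, or an empty string for Top Sites previews."""
    return "" if is_preview_request(environ) else ANALYTICS_SNIPPET


def app(environ, start_response):
    """Tiny WSGI app that leaves analytics code out of preview fetches."""
    body = "<html><body><p>Hello</p>" + analytics_markup(environ) + "</body></html>"
    start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
    return [body.encode("utf-8")]


if __name__ == "__main__":
    from wsgiref.simple_server import make_server
    make_server("localhost", 8000, app).serve_forever()
```

Keeping the check in one helper mirrors the include-file approach: every page that renders analytics goes through the same condition.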

MySQL Ruby Gem CentOS RHEL 5 Installation Error Troubleshooting

Building and installing the Ruby mysql gem on freshly-installed Red Hat based systems sometimes produces the frustratingly ambiguous error below:

# gem install mysql
/usr/bin/ruby extconf.rb
checking for mysql_ssl_set()... no
checking for rb_str_set_len()... no
checking for rb_thread_start_timer()... no
checking for mysql.h... no
checking for mysql/mysql.h... no
*** extconf.rb failed ***
Could not create Makefile due to some reason, probably lack of
necessary libraries and/or headers. Check the mkmf.log file for more
details. You may need configuration options.

Searching the web for info on this error yields two basic solutions:

- Install the mysql-devel package (this provides the mysql.h file in /usr/include/mysql/).

- Run gem install mysql -- --with-mysql-config=/usr/bin/mysql_config or some other additional options.

These are correct but not sufficient. Because this gem compiles a library to interface with MySQL’s C API, the gcc and make packages are also required to create the build environment:

# yum install mysql-devel gcc make

# gem install mysql -- --with-mysql-config=/usr/bin/mysql_config

Alternatively, if you’re using your distro’s ruby (not a custom build …
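Before running gem install, a quick preflight check can confirm that the pieces listed above are present. This is a minimal Python sketch, not part of the gem's own tooling: it looks for gcc, make, and mysql_config on the PATH and for mysql.h under /usr/include/mysql/, the location the post gives for mysql-devel. The function name is hypothetical, other distributions may put the header elsewhere, and shutil.which assumes Python 3.3 or later, which RHEL 5 itself does not ship.

```python
import os
import shutil

# Build tools the post says are required, plus the mysql_config it passes to the gem.
REQUIRED_TOOLS = ("gcc", "make", "mysql_config")
# Header installed by mysql-devel, per the post.
REQUIRED_HEADERS = ("/usr/include/mysql/mysql.h",)


def missing_prerequisites(which=shutil.which, exists=os.path.exists):
    """Return the tools and headers that could not be found.

    `which` and `exists` are injectable so the check can be exercised
    without touching the real system.
    """
    missing = [tool for tool in REQUIRED_TOOLS if which(tool) is None]
    missing += [header for header in REQUIRED_HEADERS if not exists(header)]
    return missing


if __name__ == "__main__":
    gaps = missing_prerequisites()
    if gaps:
        print("Missing before `gem install mysql`: " + ", ".join(gaps))
        print("Try: yum install mysql-devel gcc make")
    else:
        print("Build prerequisites look present.")
```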

database hosting mysql redhat rails

On Linux, noatime includes nodiratime

Note to performance-tweaking Linux sysadmins, pointed out to me by Selena Deckelmann: On Linux, the filesystem attribute noatime includes nodiratime, so there’s no need to say both “noatime,nodiratime” as I once did. (See this article on atime for details if you’re not familiar with it.)

Apparently nodiratime was added later as a subset of noatime that applies only to directories, so you can still get a bit of a performance boost in situations where noatime on files would cause trouble (as with mutt and a few other applications that care about atimes).

See also the related newer relatime attribute in the mount(8) manpage.
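You can automate cleaning up existing mount options along these lines. The following minimal Python sketch reads fstab-style lines (device, mount point, type, options, and so on) and suggests dropping nodiratime wherever noatime is already set. It only prints suggestions and never edits /etc/fstab; the function names are hypothetical.

```python
def simplify_atime_options(options):
    """Drop a redundant nodiratime from a comma-separated option string.

    On Linux, noatime already implies nodiratime, so keeping both is redundant.
    """
    opts = options.split(",")
    if "noatime" in opts:
        opts = [o for o in opts if o != "nodiratime"]
    return ",".join(opts)


def fstab_suggestions(lines):
    """Yield (mount point, current options, simplified options) for entries
    whose options could drop nodiratime."""
    for line in lines:
        fields = line.split()
        if len(fields) < 4 or fields[0].startswith("#"):
            continue  # blank, comment, or malformed line
        mount_point, options = fields[1], fields[3]
        simplified = simplify_atime_options(options)
        if simplified != options:
            yield mount_point, options, simplified


if __name__ == "__main__":
    with open("/etc/fstab") as fstab:
        for mount_point, old, new in fstab_suggestions(fstab):
            print(f"{mount_point}: {old} -> {new}")
```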

hosting optimization tips

Monday Reading Material

Just a few links from the past week that are worth checking out:

- “Spices: the internet of the ancient world!”, from the Planet Money podcast. Great storytelling about the ancient spice trade and how information about where certain spices came from eventually leaked out and popped the spice trading bubble/monopoly.

- Enterprise software is entirely bereft of soul. Reading this reminded me of antifeatures and the competitive advantages of open source software.

- Emulating Empathy. A nice summary of how interacting with software users (customers) on non-technical issues, or over high-level requirements, provokes creativity. It also makes the point that good customer communication is a skill, not an innate talent, which means it can be taught and learned. :)

- Interaxon. Beyond the cute name, this is a fascinating company and concept based in Vancouver, BC. Thought-controlled computing! Looking forward to seeing what comes out of their “Bright Ideas” exhibit during the Winter Olympics.

tips

PostgreSQL version 9.0 release date prediction

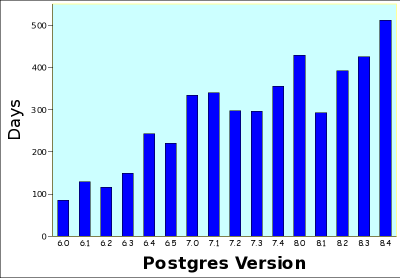

So when will PostgreSQL version 9.0 come out? I decided to “run the numbers” and take a look at how the Postgres project has done historically. Here’s a quick graph showing the approximate number of days each major release since version 6.0 took:

Some interesting things can be seen here: there is a rough correlation between the complexity of a new release and the time it takes, major releases take longer, and the trend is gradually towards more days per release. Overall the project is doing great, releasing on average every 288 days since version 6. If we only look at version 7 and onwards, releases are on average 367 days apart. Looking at just version 7, the average is 324 days; looking at just version 8, it is 410.

Since the last major version (8.4) came out on July 1, 2009, the numbers predict 9.0 will be released on July 3, 2010, using the 367-day average for versions 7 and 8, or on August 15, 2010, using the 410-day average for version 8 alone. However, this upcoming version has two very major features, streaming replication (SR) and hot standby (HS). How those will affect the release schedule remains to be seen, but I suspect the 9.0 to 9.1 window will be short indeed. …
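The prediction is plain date arithmetic: add an average gap to the July 1, 2009 release date. Here is a minimal Python sketch using only the averages quoted in this post. mean_gap_days shows how such an average could be computed from a list of release dates, but no historical release dates are included, so the averages are taken as given. The helper names are hypothetical.

```python
from datetime import date, timedelta

# Release date of the last major version (8.4), from the post.
LAST_RELEASE = date(2009, 7, 1)

# Average days between major releases, as quoted in the post.
AVERAGE_GAPS = {
    "since 6.0": 288,
    "7.x only": 324,
    "7.x and 8.x": 367,
    "8.x only": 410,
}


def mean_gap_days(release_dates):
    """Average number of days between consecutive releases."""
    ordered = sorted(release_dates)
    if len(ordered) < 2:
        raise ValueError("need at least two release dates")
    gaps = [(later - earlier).days for earlier, later in zip(ordered, ordered[1:])]
    return sum(gaps) / len(gaps)


def predict(last_release, average_days):
    """Naive prediction: the next release lands one average gap later."""
    return last_release + timedelta(days=average_days)


if __name__ == "__main__":
    for label, gap in AVERAGE_GAPS.items():
        print(f"{label:>12}: {predict(LAST_RELEASE, gap).isoformat()}")
```

Running it reproduces the two dates in the post for the 367-day and 410-day averages. Like any average-based projection, it ignores release-specific factors such as the SR and HS work.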

community database open-source postgres

LCA2010: Postgres represent!

I had the pleasure of attending and presenting at linux.conf.au this year in Wellington, NZ. The conference is an institution whose friendliness and focus on the practical business of creating and sustaining open source projects were truly inspirational.

My talk this year was “A Survey of Open Source Databases”, for which I actually created a survey and asked over 35 open source database projects to respond. I have received about 15 responses so far, and I also did my own research on the more than 50 projects I identified. I created a placeholder site for my research at ossdbsurvey.org. I’m hoping to revise the survey (make it shorter!!) and get more projects to provide information.

Ultimately, I’d like the site to be a central location for finding information about different projects and comparing them. Performance is a huge issue, and a lot of individuals are constructing good (and bad) systems for comparing databases. I don’t think I want to dive into that pool yet, but I would like to start collecting the work others have done in a central place. Right now it is far too difficult to find all of this information.

Part of the talk was also a foray into the dangerous world of classification. …

postgres

Automatic migration from Slony to Bucardo

About a month ago, Bucardo added an interesting set of features in the form of a new script called slony_migrator.pl. In this post I’ll describe slony_migrator.pl and its three major functions.

The Setup

For these examples, I’m using the pagila sample database along with a set of scripts I wrote and made available here. These scripts build two different Slony clusters. The first is a simple one, which replicates this database from a database called “pagila1” on one host to a database “pagila2” on another host. The second is more complex: its single master node replicates the pagila database to two slave nodes, one of which replicates it again to a fourth node using Slony’s FORWARD option as described here. I implemented this setup on two FreeBSD virtual machines, known as myfreebsd and myfreebsd2. The reset-simple.sh and reset-complex.sh scripts in the script package I’ve linked to will build all the necessary databases from one pagila database and do all the Slony configuration.
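To make the complex topology concrete, here is a minimal Python sketch that models the cluster as a map from each subscriber node to its provider and prints the cascade as a tree. The node numbers, and the assumption that node 2 is the slave forwarding to node 4, are hypothetical. This is only an illustration of the shape of the cluster and does not reproduce slony_migrator.pl's output format.

```python
# Hypothetical model of the "complex" cluster: subscriber node -> provider node.
# Node 1 is the master; nodes 2 and 3 subscribe to it, and node 4 receives
# changes forwarded by node 2 (which slave forwards is an assumption).
PROVIDERS = {2: 1, 3: 1, 4: 2}


def children(providers, node):
    """Nodes that subscribe directly to `node`."""
    return sorted(n for n, p in providers.items() if p == node)


def render_tree(providers, root, depth=0):
    """Return the replication cascade as indented lines, one per node."""
    lines = ["  " * depth + f"node {root}"]
    for child in children(providers, root):
        lines.extend(render_tree(providers, child, depth + 1))
    return lines


def forwarding_nodes(providers):
    """Subscribers that also act as providers, i.e. must forward changes."""
    return sorted({p for p in providers.values() if p in providers})


if __name__ == "__main__":
    print("\n".join(render_tree(PROVIDERS, 1)))
    print("forwarders:", forwarding_nodes(PROVIDERS))
```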

Slony Synopsis

The slony_migrator.pl script has three possible actions, the first of which is to connect to a running Slony cluster and print a synopsis of the Slony setup it …

postgres bucardo replication

PostgreSQL tip: using pg_dump to extract a single function

A common task that comes up in PostgreSQL is the need to dump/edit a specific function. Ideally you’re using DDL files and version control (hello, git!) to manage your schema, but you don’t always have the luxury of working in such a controlled environment. Recent versions of psql have the \ef command to edit a function from within your favorite editor, but it is only available from version 8.4 onward.

An alternate approach is to use the following invocation:

pg_dump -Fc -s | pg_restore -P 'funcname(args)'

The -s flag is the short form of --schema-only; i.e., we don’t want to waste time and space dumping the data. The -P flag tells pg_restore to output only the function with the given signature.

As always, there are some caveats: the function name must be spelled out explicitly using the full type names as they occur in the custom-format dump (e.g., you must use ‘foo_func(integer)’ instead of ‘foo_func(int)’). You can always see a list of all of the available functions by using the command:

pg_dump -Fc -s | pg_restore -l | grep FUNCTION

postgres
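You can combine the two commands in a script: list the functions, pick the exact signature, then pass it to -P. Here is a minimal Python sketch. It assumes pg_dump and pg_restore are on the PATH and that pg_restore -l prints function entries shaped like `215; 1255 16397 FUNCTION public foo_func(integer) postgres` (dump ID, catalog OIDs, object type, schema, signature, owner). The helper names are hypothetical. Because subprocess receives an argument list, the signature needs no shell quoting.

```python
import subprocess


def function_signatures(toc_text):
    """Return (schema, signature) pairs for FUNCTION entries in
    `pg_restore -l` output, e.g. ("public", "foo_func(integer)")."""
    found = []
    for line in toc_text.splitlines():
        if line.startswith(";"):
            continue  # header and comment lines
        _dump_id, sep, body = line.partition("; ")
        parts = body.split(" ", 3)  # tableoid, oid, object type, rest
        if not sep or len(parts) < 4 or parts[2] != "FUNCTION":
            continue
        schema, _, remainder = parts[3].partition(" ")
        # Signatures may contain spaces ("bar(integer, text)"), so peel the
        # owner off the right-hand end instead of splitting on every space.
        signature, _, _owner = remainder.rpartition(" ")
        if signature:
            found.append((schema, signature))
    return found


def _schema_dump(dbname):
    """Custom-format, schema-only dump: pg_dump -Fc -s DBNAME."""
    return subprocess.run(["pg_dump", "-Fc", "-s", dbname],
                          capture_output=True, check=True).stdout


def list_functions(dbname):
    """Roughly: pg_dump -Fc -s DBNAME | pg_restore -l | grep FUNCTION."""
    toc = subprocess.run(["pg_restore", "-l"], input=_schema_dump(dbname),
                         capture_output=True, check=True)
    return function_signatures(toc.stdout.decode())


def dump_function(dbname, signature):
    """Equivalent of: pg_dump -Fc -s DBNAME | pg_restore -P 'SIGNATURE'."""
    restored = subprocess.run(["pg_restore", "-P", signature],
                              input=_schema_dump(dbname),
                              capture_output=True, check=True)
    return restored.stdout.decode()
```

For example, `dump_function("pagila", "foo_func(integer)")` would return the CREATE FUNCTION SQL, provided a function with exactly that signature appears in `list_functions("pagila")`.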