PostgreSQL tip: arbitrary serialized rows

Sometimes when using PostgreSQL, you want to deal with a record in its serialized form. If you’re dealing with a specific table, you can accomplish this using the table name itself:

psql # CREATE TABLE foo (bar text, baz int);

CREATE TABLE

psql # INSERT INTO foo VALUES ('test 1', 1), ('test 2', 2);

INSERT 0 2

psql # SELECT foo FROM foo;

foo

--------------

("test 1",1)

("test 2",2)

(2 rows)This works fine for defined tables, but how to go about this for arbitrary SELECTs? The answer is simple: wrap in a subselect and alias as so:

psql # SELECT q FROM (SELECT 1, 2) q;

q

-------

(1,2)

(1 row)postgres tips

Spree on Heroku for Development

Yesterday, I worked through some issues to setup and run Spree on Heroku. One of End Point’s clients is using Spree for a multi-store solution. We are using the the recently released Spree 0.10.0.beta gem, which includes some significant Spree template and hook changes discussed here and here in addition to other substantial updates and fixes. Our client will be using Heroku for their production server, but our first goal was to work through deployment issues to use Heroku for development.

Since Heroku includes a free offering to be used for development, it’s a great option for a quick and dirty setup to run Spree non-locally. I experienced several problems and summarized them below.

Application Changes

1. After a failed attempt to setup the basic Heroku installation described here because of a RubyGems 1.3.6 requirement, I discovered the need for Heroku’s bamboo deployment stack, which requires you to declare the gems required for your application. I also found a Spree Heroku extension and reviewed the code, but I wanted to take a more simple approach initially since the extension offers several features that I didn’t need. After some testing, I created a .gems file in the main …

hosting rails spree

Riak Install on Debian Lenny

I’m doing some comparative analysis of various distributed non-relational databases and consequently wrestled with the installation of Riak on a server running Debian Lenny.

I relied upon the standard “erlang” debian package, which installs cleanly on a basically bare system without a hitch (as one would expect). However, the latest Riak’s “make” tasks fail to run; this is because the rebar script on which the make tasks rely chokes on various bad characters:

riak@nosql-01:~/riak$ make all rel

./rebar compile

./rebar:2: syntax error before: PK

./rebar:11: illegal atom

./rebar:30: illegal atom

./rebar:72: illegal atom

./rebar:76: syntax error before: ��n16

./rebar:79: syntax error before: ','

./rebar:91: illegal integer

./rebar:149: illegal atom

./rebar:160: syntax error before: Za��ze

./rebar:172: illegal atom

./rebar:176: illegal atom

escript: There were compilation errors.

make: *** [compile] Error 127Delicious.

Ultimately, I came across this article describing issues getting Riak to install on Ubuntu 9.04, and ultimately determined that the Erlang version mentioned seemed to apply here. Following the article’s instructions for building Erlang from source worked out …

database hosting nosql

PostgreSQL EC2/EBS/RAID 0 snapshot backup

One of our clients uses Amazon Web Services to host their production application and database servers on EC2 with EBS (Elastic Block Store) storage volumes. Their main database is PostgreSQL.

A big benefit of Amazon’s cloud services is that you can easily add and remove virtual server instances, storage space, etc. and pay as you go. One known problem with Amazon’s EBS storage is that it is much more I/O limited than, say, a nice SAN.

To partially mitigate the I/O limitations, they’re using 4 EBS volumes to back a Linux software RAID 0 block device. On top of that is the xfs filesystem. This gives roughly 4x the I/O throughput and has been effective so far.

They ship WAL files to a secondary server that serves as warm standby in case the primary server fails. That’s working fine.

They also do nightly backups using pg_dumpall on the master so that there’s a separate portable (SQL) backup not dependent on the server architecture. The problem that led to this article is that extra I/O caused by pg_dumpall pushes the system beyond its I/O limits. It adds both reads (from the PostgreSQL database) and writes (to the SQL output file).

There are several solutions we are considering so that …

aws cloud database hosting postgres scalability backups

More Code and SEO with the Google Analytics API

My latest blog article inspiration came from an SEOmoz pro webinar on Actionable Analytics. This time around, I wrote the article and it was published on SEOmoz’s YOUmoz Blog and I thought I’d summarize and extend the article here with some technical details more appealing to our audience. The article is titled Visualizing Keyword Data with the Google Analytics API.

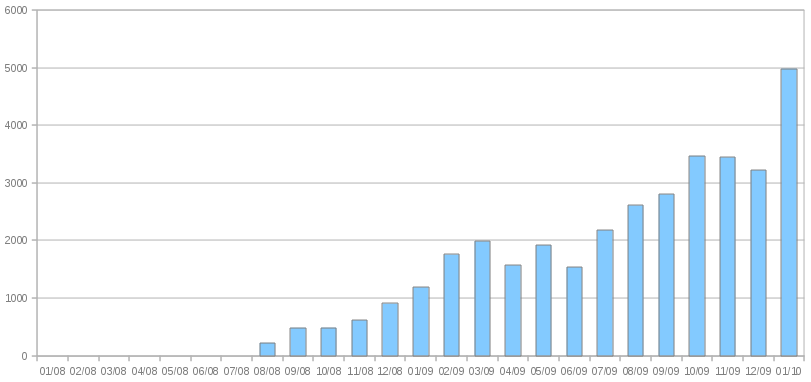

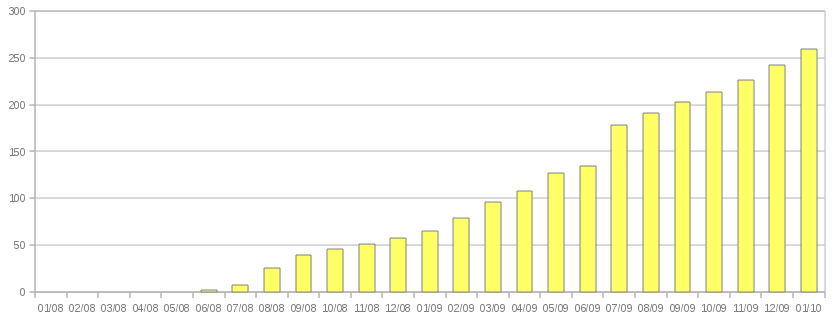

In the article, I discuss and show examples of how the number of unique keywords receiving search traffic has diversified or expanded over time and that our SEO efforts (including writing blog articles) are likely resulting in this diversification of keywords. Some snapshots from the articles:

[The unique keyword (keywords receiving at least one search visit) count per month (top) compared to the number of articles available on our blog at that time (bottom).]

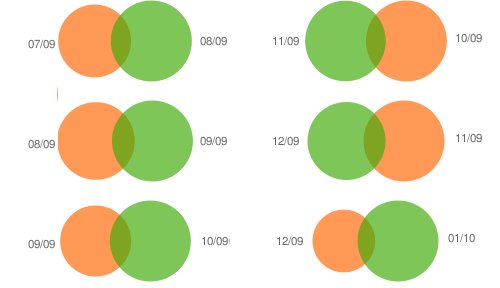

I also briefly examined how unique keywords receiving at least one visit overlapped between each month and saw about 10-20% of overlapping keywords (likely the short-tail of SEO).

[The keyword overlap per month, where the keywords receiving at least one visit in consecutive months are shown in the overlap section.]

Now, on to things that End Point’s audience …

analytics ecommerce seo

PostgreSQL tip: dump objects into a new schema

Sometimes the need arises to export a PostgreSQL database and put its contents into its own schema; say you’ve been busy developing things in the public schema. Sometime people suggest manipulating the pg_dump output either manually or using a tool such as sed or perl to explicitly schema-qualify all table objects, etc, but this is error-prone depending on your table names, and can be more trouble than its worth.

One trick that may work for you if your current database is not in use by anyone else is to rename the default public schema to your desired schema name before dumping, and then optionally changing it back to public afterward. This has the benefit that all objects will be properly dumped in the new schema (sequences, etc) and not just tables, plus you don’t have to worry about trying to parse SQL with regexes to modify this explicitly.

$ psql -c "ALTER SCHEMA public RENAME new_name"

$ pg_dump --schema=new_name > new_name_dump.sql

$ psql -c "ALTER SCHEMA new_name RENAME public"

$ # load new_name_dump.sql elsewhereCheers!

postgres tips

GNU diff: changing the output filenames

I was working on a script to monitor/compare remote versions of a file and compare against our local mirror; part of this work involved fetching the remote file to a temporary location and doing a diff -u against the local file to see if there were any changes. This worked fine, but the temporary filename was less-than descriptive.

The man page for diff was somewhat cryptic when it came to changing the displayed output filenames themselves, however based on some figuring-out, you can pass the -L (–label) flag to diff. You need to pass it twice; the first -L will replace the filename in the — output line and the second -L replaces the file in the +++ line.

$ wget -qO /tmp/grocerylist

$ diff -u /path/to/local/grocerylist -L /path/to/local/grocerylist /tmp/grocerylist -L http://mirrorsite.com/grocerylist

--- /path/to/local/grocerylist

+++ http://mirrorsite.com/grocerylist

@@ -11,7 +11,7 @@

potatoes

bread

eggs

- milk

+ soda

oranges

apples

celeryObvious shortcomings in this approach are the fact that you need to specify a redundant -L line to the first file; in my case, this was all handled programatically, so I just substituted the same parameter in both places. …

tips

MRM: The power of ‘random’, program maintenance, weave and electricity

Time for another installment of Monday Reading Material!

I’m in New Zealand (and across the dateline!) so this is appearing a day early for many of you :)

Reading material from last week:

-

The power of ‘random’. Work done with routers to combine messages (improve efficiency of transport) and then forward them at random on to another hop. Turned out this was better overall than trying to selectively forward to “most likely” next hops.

-

A Perl Programming maintenance checklist. Things you can do to make your code maintainable (in any language!)

-

Mozilla Weave. Bookmark sync!

-

How much energy How do I find out how much electricity something uses?. Great article on figuring this out. I have a kill a watt and need to investigate a few appliances I have at home.

tips