Bucardo replication workarounds for extremely large Postgres updates

Bucardo is very good at replicating data among Postgres databases (as well as replicating to other things, such as MariaDB, Oracle, and Redis!). However, sometimes you need to work outside the normal flow of trigger-based replication systems such as Bucardo. One such scenario is when a lot of changes need to be made to your replicated tables. And by a lot, I mean many millions of rows. When this happens, it may be faster and easier to find an alternate way to replicate those changes.

When a change is made to a table that is being replicated by Bucardo, a trigger fires and stores the primary key of the row that was changed into a “delta” table. Then the Bucardo daemon comes along, gathers a list of all rows that were changed since the last time it checked, and pushes those rows to the other databases in the sync (a named replication set). Although all of this is done in a fast and efficient manner, there is a bit of overhead that adds up when, for example, updating 650 million rows in one transaction.
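The trigger-and-delta flow described above can be pictured with a toy in-memory model. This is only a sketch of the idea, not Bucardo's actual code: the names `makeSource`, `update`, and `sync` are hypothetical, and plain JavaScript Maps stand in for the databases.

```javascript
// Toy model of trigger-based replication (NOT Bucardo's real implementation).
// makeSource, update, and sync are hypothetical names used for illustration.
function makeSource(rows) {
  const table = new Map(rows.map((row) => [row.id, { ...row }]));
  const delta = []; // stands in for the "delta" table of changed primary keys

  return {
    table,
    delta,
    // Every write also records the primary key, as the trigger would.
    update(id, changes) {
      table.set(id, { ...table.get(id), ...changes, id });
      delta.push(id);
    },
  };
}

// Stands in for the daemon: gather the distinct changed keys, copy those
// rows to every target, then clear the delta entries that were processed.
function sync(source, targets) {
  const changed = [...new Set(source.delta)];
  for (const target of targets) {
    for (const id of changed) {
      target.set(id, { ...source.table.get(id) });
    }
  }
  source.delta.length = 0;
  return changed.length;
}

const source = makeSource([{ id: 1, price: 10 }, { id: 2, price: 20 }]);
const replica = new Map();
source.update(1, { price: 11 });
source.update(1, { price: 12 }); // same row again: a second delta entry
source.update(2, { price: 21 });
const copied = sync(source, [replica]);
```

Even in this toy version, every changed row costs one extra write to the delta list plus one copy per target, which hints at why the bookkeeping becomes noticeable at hundreds of millions of rows.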

The first and best solution is to simply hand-apply all the changes yourself to every database you are replicating to. By disabling the Bucardo triggers …

bucardo postgres database

A caching, resizing, reverse proxying image server with Nginx

While working on a complex project, we had to set up a caching, reverse proxying image server able to automatically resize any cached image on the fly.

Looking around on the Internet, we discovered an amazing blog post describing how Nginx could do that with a neat Image Filter module capable of resizing, cropping and rotating images, creating an Nginx-only solution.

What we wanted

What we wanted to achieve in our test configuration was to have a URL like:

http://www.example.com/image/

…that would retrieve the image at:

…then resize it on the fly, cache it and serve it.

Our setup ended up being almost the same as the one in that blog post, with some slight differences.
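The fetch, resize, cache, and serve flow described above can be sketched as a small cache-aside function. In the real setup Nginx does all of this itself; the JavaScript below is only an illustration, and `makeImageServer`, `fetchOriginal`, `resize`, and the cache key format are hypothetical.

```javascript
// Hypothetical sketch of the request flow; Nginx handles this in practice.
function makeImageServer(fetchOriginal, resize) {
  const cache = new Map();

  return function serve(path, width) {
    // The cache key includes the requested size, so each size is stored once.
    const key = `${path}|w=${width}`;
    if (!cache.has(key)) {
      cache.set(key, resize(fetchOriginal(path), width));
    }
    return cache.get(key);
  };
}

// Stand-in fetch and resize functions that only track metadata.
let fetches = 0;
const serve = makeImageServer(
  (path) => {
    fetches += 1;
    return { path, width: 1000 };
  },
  (image, width) => ({ ...image, width })
);

const first = serve("/photo.jpg", 200); // fetched and resized
const second = serve("/photo.jpg", 200); // served from the cache
```

Only the first request for a given path and size touches the origin; later requests reuse the stored result.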

Requirements installation

First, as the post points out, the Image Filter module is not installed by default on many Linux distributions. As we’re using Nginx’s official repositories, it was just a matter of installing the nginx-module-image-filter package and restarting the service.

Cache Storage configuration

Continuing to follow the post’s great instructions, we set up the cache in our main http section, tuning each parameter to fit our specific needs. We …

nginx

How to Add Labels to a Dimple JS Line Chart

I was recently working on a project that was using DimpleJS, which the docs describe as “An object-oriented API for business analytics powered by d3”. I was using it to create a variety of graphs, some of which were line graphs. The client had requested that the line graph display the y-value of the line on the graph. This is easily accomplished with bar graphs in Dimple, but not so easily done with line graphs.

I had spent some time Googling to find what others had done to add this functionality but could not find it anywhere. So, I read the documentation where they add labels to a bar graph, and “tweaked” it like so:

    var s = myChart.addSeries(null, dimple.plot.line);
    .
    .
    .
    /*Add prices to line chart*/
    s.afterDraw = function (shape, data) {
      // Get the shape as a d3 selection
      var s = d3.select(shape);
      var i = 0;
      _.forEach(data.points, function(point) {
        var rect = {
          x: parseFloat(point.x),
          y: parseFloat(point.y)
        };
        // Add a text label for the value
        if(data.markerData[i] != undefined) {
          svg.append("text")
            .attr("x", rect.x)
            .attr("y", rect.y - 10)
            // Centre align
            .style("text-anchor", "middle") …

visualization javascript

Randomized Queries in Ruby on Rails

I was recently asked about options for displaying a random set of items from a table using Ruby on Rails. The request was complicated by the fact that the technology stack hadn’t been completely decided on, and one of the items still up in the air was the database. I once worked on a project where the decision was made to switch from MySQL to PostgreSQL. During the switch, a sizable number of hand-constructed queries stopped functioning and had to be manually translated before they would work again. Learning from that experience, I favor avoiding handwritten SQL in my Rails queries when possible. This precludes the option to use built-in database functions like rand() or random().

With the goal set in mind, I decided to look around to find out what other people were doing to solve similar requests. While perusing various suggested implementations, I noticed a lot of comments along the lines of “Don’t use this approach if you have a large data set.” or “this handles large data sets, but won’t always give a truly random result.”
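One database-agnostic approach of the kind those comments warn about is to do the randomization in application code: load the candidate ids, then sample from them. The sketch below is written in JavaScript to match the other code on this page rather than Ruby, and it is not taken from the post; `sampleIds` is a hypothetical helper, and in a Rails application the id list would come from the database.

```javascript
// Partial Fisher-Yates shuffle: returns up to `count` distinct ids, each
// subset equally likely. `random` is injectable for testing and defaults
// to Math.random. sampleIds is a hypothetical helper, not a library API.
function sampleIds(ids, count, random = Math.random) {
  const pool = ids.slice(); // copy so the caller's array is left untouched
  const n = Math.min(count, pool.length);
  for (let i = 0; i < n; i += 1) {
    // Pick a random position from the part of the pool not yet chosen.
    const j = i + Math.floor(random() * (pool.length - i));
    [pool[i], pool[j]] = [pool[j], pool[i]];
  }
  return pool.slice(0, n);
}

const allIds = [11, 12, 13, 14, 15];
const picked = sampleIds(allIds, 3);
```

The result is uniformly random, but every id has to be held in memory first, which is exactly the “don’t use this approach if you have a large data set” trade-off.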

These comments and the variety of solutions got me thinking about evaluating based not only on what database is in use, but …

database ruby rails

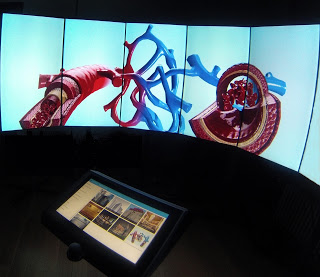

Sketchfab on the Liquid Galaxy

For the last few weeks, our developers have been working on syncing our Liquid Galaxy with Sketchfab. Our integration makes use of the Sketchfab API to synchronize multiple instances of Sketchfab in the immersive and panoramic environment of the Liquid Galaxy. The Liquid Galaxy already has so many amazing capabilities, and to be able to add Sketchfab to our portfolio is very exciting for us! Sketchfab, known as the “YouTube for 3D files,” is the leading platform to publish and find 3D and VR content. Sketchfab integrates with all major 3D creation tools and publishing platforms, and is the 3D publishing partner of Adobe Photoshop, Facebook, Microsoft HoloLens and Intel RealSense. Given that Sketchfab can sync with almost any 3D format, we are excited about the new capabilities our integration provides.

Sketchfab content can be deployed onto the system in minutes! Users from many industries use Sketchfab, including architecture, hospitals, museums, gaming, design, and education. There is a natural overlap between the Liquid Galaxy and Sketchfab, as members of all of these industries utilize the Liquid Galaxy for its visually stunning and immersive atmosphere.

We recently had …

visionport

Gem Dependency Issues with Rails 5 Beta

The third-party gem ecosystem is one of the biggest selling points of Rails development, but the addition of a single line to your project’s Gemfile can introduce literally dozens of new dependencies. A compatibility issue in any one of those gems can bring your development to a halt, and the transition to a new major version of Rails requires even more caution when managing your gem dependencies.

In this post I’ll illustrate this issue by showing the steps required to get rails_admin (one of the two most popular admin interface gems for Rails) up and running even partially on a freshly-generated Rails 5 project. I’ll also identify some techniques for getting unreleased and forked versions of gems installed as stopgap measures to unblock your development while the gem ecosystem catches up to the new version of Rails.

After installing the current beta3 version of Rails 5 with gem install rails --pre and creating a Rails 5 project with rails new, I decided to address the first requirement of my application, an admin interface, by installing the popular Rails Admin gem. The rubygems page for rails_admin shows that its most recent release 0.8.1 from mid-November 2015 lists Rails 4 as …

rails ruby

Postgres concurrent indexes and the curse of IIT

Postgres has a wonderful feature called concurrent indexes. It allows you to create indexes on a table without blocking reads OR writes, which is quite a handy trick. There are a number of circumstances in which one might want to use concurrent indexes, the most common one being not blocking writes to production tables. There are a few other use cases as well, including:

- Replacing a corrupted index

- Replacing a bloated index

- Replacing an existing index (e.g. better column list)

- Changing index parameters

- Restoring a production dump as quickly as possible

In this article, I will focus on that last use case, restoring a database as quickly as possible. We recently upgraded a client from a very old version of Postgres to the current version (9.5 as of this writing). The fact that use of pg_upgrade was not available should give you a clue as to just how old the “very old” version was!

Our strategy was to create a new 9.5 cluster, get it optimized for bulk loading, import the globals and schema, stop write connections to the old database, transfer the data from old to new, and bring the new one up for reading and writing.

The goal was to reduce the …

postgres

Cybergenetics Helps Free Innocent Man

We all love a good ending. I was happy to hear that one of End Point’s clients, Cybergenetics, was involved in a case this week to free a falsely imprisoned man, Darryl Pinkins.

Darryl was convicted of a crime in Indiana in 1991. In 1995 Pinkins sought the help of the Innocence Project. His attorney Frances Watson and her students turned to Cybergenetics and their DNA interpretation technology called TrueAllele® Casework. The TrueAllele DNA identification results exonerated Pinkins. The Indiana Court of Appeals dropped all charges against Pinkins earlier this week and he walked out of jail a free man after fighting for 24 years to clear his name.

TrueAllele can separate out the people who contributed their DNA to a mixed DNA evidence sample. It then compares the separated out DNA identification information to other reference or evidence samples to see if there is a DNA match.

End Point has worked with Cybergenetics since 2003 and consults with them on security, database infrastructure, and website hosting. We congratulate Cybergenetics on their success in being part of the happy ending for Darryl Pinkins and his family!

More of the story is available at Cybergenetics’ Newsroom or …

community clients