TriSano and Pentaho at our NYC company meeting

Josh Tolley just spoke to us about the TriSano open source project he works on. It helps track and report on public health events, using data at least partly gathered from doctors following special legal reporting requirements to look for epidemics.

A lot of this is about data warehousing. Public health officials collect a lot of data and want to report on it easily. Typically they use SPSS, and the data needs to be filtered before analysis.

And what is a data warehouse? It stores all your data in a different way, one that is efficient for queries broken down by time (OLAP). Such queries don’t usually work very well in a normal transactional (OLTP) database.

Dimension tables: e.g. different public health departments, sex, disease. Fact tables: contain the numbers, facts that you may do math aggregation against, and links to dimension tables. The key to the whole process is deciding what you want to track.
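As a rough illustration of the dimension/fact split described above, here is a minimal sketch using Python's built-in `sqlite3` module rather than Postgres. The table names, column names, and sample rows are all made up for the example and are not taken from TriSano.

```python
import sqlite3

def build_warehouse():
    """Create a tiny in-memory star schema with invented sample data."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        -- Dimension tables: the things you want to slice by (hypothetical names).
        CREATE TABLE dim_department (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE dim_disease (id INTEGER PRIMARY KEY, name TEXT);
        -- Fact table: the numbers, plus links to the dimension tables.
        CREATE TABLE fact_cases (
            department_id INTEGER REFERENCES dim_department(id),
            disease_id INTEGER REFERENCES dim_disease(id),
            report_month TEXT,
            case_count INTEGER
        );
        INSERT INTO dim_department VALUES (1, 'North'), (2, 'South');
        INSERT INTO dim_disease VALUES (1, 'Pertussis'), (2, 'Measles');
        INSERT INTO fact_cases VALUES
            (1, 1, '2012-05', 4),
            (1, 1, '2012-06', 7),
            (2, 1, '2012-06', 2),
            (2, 2, '2012-06', 1);
    """)
    return conn

def cases_by_disease_and_month(conn):
    """Aggregate the fact table, broken down by disease and by time."""
    return conn.execute("""
        SELECT d.name, f.report_month, SUM(f.case_count)
        FROM fact_cases f
        JOIN dim_disease d ON d.id = f.disease_id
        GROUP BY d.name, f.report_month
        ORDER BY d.name, f.report_month
    """).fetchall()
```

Calling `cases_by_disease_and_month(build_warehouse())` sums case counts across departments, so the two June pertussis rows collapse into a single total. Adding another dimension (sex, for instance) would mean one more small table and one more key column in the fact table.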

Pentaho is what we use for the query interface. To do what we need, we have to make use of unpublished APIs, using JRuby to interface between Pentaho (Java) and TriSano, a Rails app. Postgres is the database.

Brian Buchalter then took over and delved more into the TriSano Rails …

java open-source rails casepointer ruby development

Web service integration in PHP, jQuery, Perl and Interchange

Jeff Boes presented on one of his latest projects.

CityPass decided on a project to convert their checkout from being served by Interchange to have the interface served by PHP, but continue to interact with Interchange for the checkout process through a web service.

The original site was entirely served by Interchange. The client then took on a project to convert the frontend to PHP while leveraging Interchange for frontend logic such as pricing and shipping, as well as for backend administration for order fulfillment.

Technologies used in the frontend rewrite:

- PHP

- jQuery for jStorage, back-button support and checkout business logic

- AJAX web services for prices, discounts, click-tracking

The Interchange handler is conduit.am, an ActionMap that handles the processing of the URL. From this ActionMap the URLs are decoded and passed to a Perl module, Data.pm, which processes the input and returns the results.

An order is just a JSON object, so testing the web service is easy. We take a known hash, post it to the proper URL, and verify that the results match what we expect. New test cases are also easy: we can capture any order (JSON) to a log file and add it as a test case.
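The testing idea above can be sketched in a few lines. This is only an illustration in Python, not the project's actual test code: the log format (one JSON document per line with `order` and `expected` keys), the URL, and the `fake_service` stand-in are all assumptions made so the example runs without a network.

```python
import json

def load_cases(log_lines):
    """Turn captured log lines (assumed: one JSON document per line) into test cases."""
    return [json.loads(line) for line in log_lines if line.strip()]

def check_order(post, url, order_json, expected):
    """Post an order and compare the decoded response with the expected result.

    `post` is any callable (url, body) -> response text. In a real test it
    would wrap an HTTP client; here it is injected so the sketch stays offline.
    """
    return json.loads(post(url, order_json)) == expected

def fake_service(url, body):
    """Hypothetical stand-in for the web service: totals the order's items."""
    order = json.loads(body)
    total = sum(item["qty"] * item["price"] for item in order["items"])
    return json.dumps({"total": total})
```

A captured order then becomes a test case by appending one line to the log and running `check_order` over every entry returned by `load_cases`.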

interchange javascript json perl php clients

Why Piggybak exists

Some clients are debating between using Spree, an e-commerce platform, and a homegrown Rails solution for an e-commerce application.

E-commerce platforms are monolithic: they try to solve a lot of different problems at once. Also, many of these e-commerce platforms make premature decisions before getting active users on them. One way of making the features of a platform match up better to a user’s requirements is to get a minimal viable product out quickly and grow features incrementally.

Piggybak was created by first trying to identify the most stable and consistent features of a shopping cart. Here are the various pieces of a cart to consider.

- Shipping

- Tax

- CMS Features

- Product Search

- Cart / Checkout

- Product Features

- Product Taxonomy

- Discount Sales

- Rights and Roles

What doesn’t vary? Cart & Checkout.

Shipping, tax, product catalog design, sales promotions, and rights and roles all vary across different e-commerce sites. The only strict commonality is the cart and the checkout.

Piggybak is just the cart and checkout.

You mount Piggybak as a gem into any Rails app, and can assign any object as a purchasable product using the tag “acts_as_variant” and you’re …

ecommerce piggybak rails

2012 company meeting in New York City

All of us at End Point will have a change of pace this week. We are spread out in different locations around the world, so we don’t get to see everyone face to face as often as we’d like. This week we’re meeting up at our main office in New York City to spend three days in person, sharing knowledge and experiences with each other.

We’re looking forward to hearing from each other on a wide range of topics reflecting both the scope and depth of work we do:

- Recent major ecommerce projects including ground-up rebuilds, legacy system integrations, new payment processing options, and feature enhancements

- Liquid Galaxy deployments, logistics, hardware improvements, and custom tours

- Development process topics around workflow, version control, and testing

- Operations (and “DevOps”) topics including configuration management with Chef and Puppet, and monitoring with Nagios

- The Piggybak Rails ecommerce gem

- User experience in projects using RailsAdmin, Django, and jQuery

- Database and web application security

- Transitioning ecommerce architecture to modern browsers and mobile apps using web services

- And social events ranging from a little friendly technical competition to bowling to a picnic in …

company conference

Detecting Postgres SQL Injection

SQL injection attacks are often treated with scorn among seasoned DBAs and developers—“oh it could never happen to us!”. Until it does, and then it becomes a serious matter. It can, and most likely will eventually happen to you or one of your clients. It’s prudent to not just avoid them in the first place, but to be proactively looking for attacks, to know what to do when they occur, and know what steps to take after you have cleaned up the mess.

What is a SQL injection attack? Broadly speaking, it is a malicious user entering data to subvert the nature of your original query. This is almost always through a web interface, and involves an “unescaped” parameter that can be used to change the data returned or perform other database actions. The user “injects” their own SQL into your original SQL statement, changing the query from its original intent.
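To make the mechanism concrete, here is a minimal sketch in Python. It uses the built-in `sqlite3` module as a stand-in for Postgres, and the `orders` table, its columns, and the sample rows are hypothetical. The unsafe function pastes user input into the SQL text; the safe one passes it through placeholders.

```python
import sqlite3

def setup():
    """Create a throwaway in-memory table with two invented orders."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (order_id INTEGER, owner TEXT)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, "alice"), (2, "bob")])
    return conn

def lookup_unsafe(conn, order_id, owner):
    # Vulnerable: the input becomes part of the SQL statement itself.
    sql = (
        "SELECT order_id, owner FROM orders "
        f"WHERE order_id = {order_id} AND owner = '{owner}'"
    )
    return conn.execute(sql).fetchall()

def lookup_safe(conn, order_id, owner):
    # Placeholders keep the input as data, so it cannot change the query.
    sql = "SELECT order_id, owner FROM orders WHERE order_id = ? AND owner = ?"
    return conn.execute(sql, (order_id, owner)).fetchall()
```

With an input such as `1 OR 1=1 --`, the unsafe version ends up running a query whose ownership check has been commented out, so it returns every row. The safe version treats the same string as a single value that matches no order.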

For example, you have a page in which a logged-in customer can look up their orders by an order_number, a text field on a web form. The query thus looks like this in your code:

$order_id = cgi_param('order_number');

$sql = "SELECT * FROM order WHERE order_id = $order_id AND order_owner = '$username'"; …

database monitoring postgres security

Devise on Rails: Prepopulating Form Data

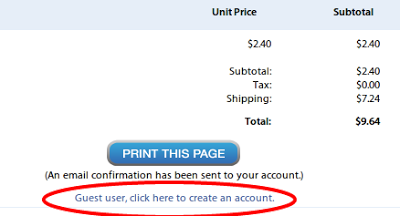

I recently had a unique (but reasonable) request from a client: after an anonymous/guest user had completed checkout, they requested that a “Create Account” link be shown on the receipt page which would prepopulate the user form data with the user’s checkout billing address. Their application is running on Ruby on Rails 3.2 and uses devise. Devise is a user authentication gem that’s popular in the Rails community.

A customer request was to include a link on the receipt page that would autopopulate the user create account form with checkout data.

Because devise is a Rails engine (self-contained Rails functionality), the source code is not included in the main application code repository. While using bundler, the version information for devise is stored in the application’s Gemfile.lock, and the engine source code is stored depending on bundler configuration. Because the source code does not live in the main application, modifying the behavior of the engine is not quite as simple as editing the source code. My goal here was to find an elegant solution to hook into the devise registration controller to set the user parameters.

ActiveSupport::Concern

To start off, I set up a …

rails

Integrating UPS WorldShip - Pick and Pack

Using UPS WorldShip to automate a pick and pack scenario

There are many options when selecting an application to handle your shipping needs. Typically you will be bound to one of the popular shipping services: UPS, FedEx, or USPS, or a combination thereof. In my experience UPS WorldShip offers a very robust shipping application that is dynamic enough to accommodate integration with just about any custom or out-of-the-box ecommerce system.

UPS WorldShip offers many automation features and several different ways to integrate with it. The two main automated features are batch label printing and individual label printing. I would like to cover my favorite way of using UPS WorldShip, one that allows you to import and export data seamlessly.

You should choose the solution that works best for you and your shipping procedure. In this blog post I would like to discuss a common warehouse scenario referred to as Pick and Pack. The basic idea of this scenario is that an order is selected for a warehouse worker to fulfill; it is then picked, packed, and shipped. UPS WorldShip allows you to do this in a very automated way with a bit of customization. This is a great solution for a small to …

ecommerce shipping

Simple Pagination with AJAX

Here’s a common problem: you have a set of results you want to display (search results, or products in a category) and you want to paginate them in a way that doesn’t submit and re-display your results page every time. AJAX is a clear winner in this; I’ll outline a very simple, introductory approach for carrying this off.

(I’m assuming that the reader has some modest familiarity with JavaScript and jQuery, but no great expertise. My solutions below will tend toward the “Cargo Cult” programming model, so that you can cut and paste, tweak, and go, but with enough “how and why” sprinkled in so you will come away knowing enough to extend the solution as needed.)

Firstly, you have to have the server-side processing in place to serve up paginated results in a way you can use. We’ll assume that you can write or adapt your current results source to produce this for a given URL and parameters:

/search?param1=123&param2=ABC&sort=colA,colB&offset=0&size=24

That URL offers a stateless way to retrieve a slice of results: in this case, it corresponds to a query something like:

SELECT … FROM … WHERE param1='123' AND param2='ABC'

ORDER BY colA,colB OFFSET 0 LIMIT …

javascript json
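The mapping from the search URL's parameters to a paginated query can be sketched on the server side roughly as follows. This is an assumption-laden illustration in Python, not the post's own code: the `results` table, the sort whitelist, the default page size of 24, and the cap of 100 are all hypothetical choices.

```python
from urllib.parse import urlsplit, parse_qs

# Hypothetical whitelist: only these columns may appear in ORDER BY.
ALLOWED_SORT = {"colA", "colB"}

def page_query(url):
    """Translate a search URL into a parameterized SQL string and its arguments."""
    params = parse_qs(urlsplit(url).query)

    def first(name, default=""):
        # parse_qs returns a list per key; take the first value or a default.
        return params.get(name, [default])[0]

    offset = max(int(first("offset", "0")), 0)
    size = min(max(int(first("size", "24")), 1), 100)  # clamp the page size
    sort_cols = [c for c in first("sort").split(",") if c in ALLOWED_SORT]
    order_by = " ORDER BY " + ",".join(sort_cols) if sort_cols else ""

    sql = (
        "SELECT * FROM results WHERE param1 = ? AND param2 = ?"
        f"{order_by} LIMIT ? OFFSET ?"
    )
    return sql, (first("param1"), first("param2"), size, offset)
```

Because the request carries its own offset and size, the client can ask for any page without the server remembering anything between calls. Sort columns are checked against a whitelist since column names cannot be passed as placeholders.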