Python string formatting and UTF-8 problems workaround

Recently I worked on a program which required me to filter hundred of lines of blog titles. Throughout the assignment I stumbled upon a few interesting problems, some of which are outlined in the following paragraphs.

Non Roman characters issue

During the testing session I missed one title and investigating why it happened, I found that it was simply because the title contained non-Roman characters.

Here is the code’s snippet that I was previously using:

for e in results:

simple_author=e['author'].split('(')[1][:-1].strip()

if freqs.get(simple_author,0) < 1:

print parse(e['published']).strftime("%Y-%m-%d") , "--",simple_author, "--", e['title']And here is the fixed version

for e in results:

simple_author=e[ …python

Documenting web services with Perl POD and AJAX

Perl POD is a handy, convenient, but low-tech approach to embedded documentation. Consider a web service in Dancer:

get time => sub {

return scalar(localtime());

};(Disclaimer: my actual use-case of this technique was even more legacy: I was documenting Interchange Actionmaps that returned images, JSON, etc.)

Your application might have several, or even dozens of these, with various parameters, returning data in JSON or TXT or CSV or who-knows-what. I chose to document these in Perl POD (Plain Old Documentation) format, e.g.,

=pod

=head1 time

Retrieves the current time

=over 3

=item Parameters

None.

=item Example

=begin html

<script src="/js/example-time.js" type="text/javascript"></script>

=end html

=back

=cutThis block gets inserted right in-line with the web service code, so it’s immediately obvious to anyone maintaining it (and thus has the best chance of being maintained if and when the code changes!). Now I can generate an HTML page directly from my Perl code:

$ pod2html MyPackage.pmYour output looks something like this (excerpted for clarity):

time

Retrieves the current time Parameters

None.

Where the magic comes in …

dancer javascript perl

IPython Tips and Tricks

Recently I have been working on Python automation scripts. Very often I use IPython to develop/debug the code.

IPython is an advanced interactive python shell. It is a powerful tool which has many more features. However, here I would like to share some of the cool tricks of IPython.

Getting help

Typing object_name? will print all sorts of details about any object, including docstrings, function definition lines (for call arguments) and constructor details for classes.

In [1]: import datetime

In [2]: datetime.datetime?

Docstring:

datetime(year, month, day[, hour[, minute[, second[, microsecond[,tzinfo]]]]])

The year, month and day arguments are required. tzinfo may be None, or an

instance of a tzinfo subclass. The remaining arguments may be ints or longs.

File: /System/Library/Frameworks/Python.framework/Versions/2.7/lib/python2.7/lib-dynload/datetime.so

Type: typeMagic commands

Edit

This will bring up an editor to type multiline code and execute the resulting code.

In [3]: %edit

IPython will make a temporary file named: /var/folders/xh/2m0ydjs51qxd_3y2k7x50hjc0000gn/T/ipython_edit_jnVJ51/ipython_edit_NdnenL.pyIn [3]: %edit -pThis will bring up the editor with the …

python tips tools

Heroku: dumping production database to staging

If you need to dump the production database locally Heroku has a nice set of tools to make this as smooth as humanly possible. In short, remember these two magic words: pg:pull and pg:push. This article details the process https://devcenter.heroku.com/articles/heroku-postgresql#pg-push-and-pg-pull

However, when I first tried it I had to resolved few issues.

My first problem was:

pg:pull not foundTo fix this:

- Uninstall the “heroku” gem with

gem uninstall heroku (Select 'All Versions')- Find your Ruby “bin” path by running

gem env(it’s under “EXECUTABLE DIRECTORY:”)

-

Cd to the “bin” folder.

-

Remove the Heroku executable with

rm heroku-

Restart your shell (close Terminal tab and re-open)

-

Type

heroku versionyou should now see something like:

heroku-toolbelt/2.33.1 (x86_64-darwin10.8.0) ruby/1.9.3Now you can proceed with the transfer:

- Type

heroku config --app production-appNote the DATABASE_URL, for example let’s imagine that the production database url is HEROKU_POSTGRESQL_KANYE_URL, and the staging database url is HEROKU_POSTGRESQL_NORTH

- Run

heroku pg:pull HEROKU_POSTGRESQL_KANYE rtwtransferdb --app production-app

heroku config --app staging-app …database heroku

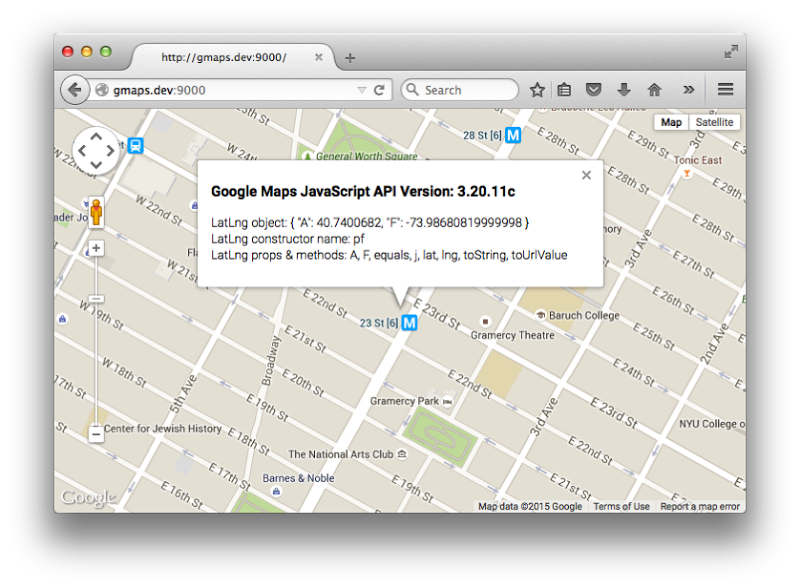

Google Maps JavaScript API LatLng Property Name Changes

Debugging Broken Maps

A few weeks ago I had to troubleshoot some Google Maps related code that had suddenly stopped working. Some debugging revealed the issue: the code adding markers to the page was attempting to access properties that did not exist. This seemed odd because the latitude and longitude values were the result of a geocoding request which was completing successfully. The other thing which stood out to me were the property names themselves:

var myLoc = new google.maps.LatLng(results[0].geometry.location.k, results[0].geometry.location.D);It looked like the original author had inspected the geocoded response, found the ‘k’ and ‘D’ properties which held latitude and longitude values and used them in their maps code. This had all been working fine until Google released a new version of their JavaScript API. Sites that did not specify a particular version of the API were upgraded to the new version automatically. If you have Google Maps code which stopped working recently this might be the reason why.

The Solution: Use the built-in methods in the LatLng class

I recalled there being some helper methods for LatLng objects and confirmed this with a visit to the docs for …

html javascript api

The Portal project — Jenkins Continuous Integration summary

This post describes some of our experiences at End Point in designing and working on comprehensive QA/CI facilities for a new system which is closely related to the Liquid Galaxy.

Due to the design of the system, the full deployment cycle can be rather lengthy and presents us with extra reasons for investing heavily in unit test development. Because of the very active ongoing development on the system we benefit greatly from running the tests in an automated fashion on the Jenkins CI (Continuous Integration) server.

Our Project’s CI Anatomy

Our Jenkins CI service defines 10+ job types (a.k.a. Jenkins projects) that cover our system. These job types differ as far as source code branches are concerned, as well as by combinations of the types of target environments the project builds are executed on.

The skeleton of a Jenkins project is what one finds under the Configure section on the Jenkins service webpage. The source code repository and branch are defined here. Each of our Jenkins projects also fetches a few more source code repositories during the build pre-execution phase.

The environment variables are defined in a flat text file:

Another configuration file is in the JSON …

chef browsers jenkins visionport python testing

MediaWiki complete test wiki via cloning

Being able to create a quick copy of your MediaWiki site is an important skill that has many benefits. Any time you are testing an upgrade, whether major or minor, it is great to be able to perform the upgrade on a test site first. Tracking down bugs becomes a lot easier when you can add all the debugging statements you need and not worry about affecting any of the users of your wiki. Creating and modifying extensions also goes a lot smoother when you can work with an identical copy of your production wiki. I will outline the steps I use to create such a copy, also known as a “test wiki”.

Before creating a copy, there are two things that should be done to an existing MediaWiki installation: use git, and move the images directory. By “use git”, I mean to put your existing mediawiki directory (e.g. where your LocalSettings.php file lives) into version control. Because the MediaWiki software is not that large, it is simplest to just add nearly everything into git, with the exception of the images and the cache information. Here is a recipe to do just that:

$ cd /var/www/mediawiki

$ git init .

Initialized empty Git repository in …mediawiki

Updated NoSQL benchmark: Cassandra, MongoDB, HBase, Couchbase

Back in April, we published a benchmark report on a number of NoSQL databases including Cassandra MongoDB, HBase, and Couchbase. We endeavored to keep things fair and configured as identically as possible between the database engines. But a short while later, DataStax caught two incorrect configuration items, in Cassandra and HBase, and contacted us to verify the problem. Even with the effort we put in to keeping everything even, a couple erroneous parameters slipped through the cracks! I’ll save the interesting technical details for another post coming soon, but once that was confirmed we jumped back in and started work on getting corrected results.

With the configuration fixed we re-ran a full suite of tests for both Cassandra and HBase. The updated results have published a revised report that you can download in PDF format from the DataStax website (or see the overview link).

The revised results still show Cassandra leading MongoDB, HBase, and Couchbase in the various YCSB tests.

For clarity the paper also includes a few additional configuration details that weren’t in the original report. We regret any confusion caused by the prior report, and worked as quickly as possible …

benchmarks big-data database nosql cassandra mongodb couchdb