Spree vs Magento: A Feature List Comparison

Note: This article was written in June of 2010. Since then, there have been several updates to Spree. Check out the current Official Spree Extensions or review a list of all the Spree Extensions.

This week, a client asked me for a list of Spree features both in the core and in available extensions. I decided that this might be a good time to look through Spree and provide a comprehensive look at features included in Spree core and extensions and use Magento as a basis for comparison. I’ve divided these features into meaningful broader groups that will hopefully ease the pain of comprehending an extremely long list :) Note that the Magento feature list is based on their documentation. Also note that the Spree features listed here are based on recent 0.10.* releases of Spree.

Features on a Single Product or Group of Products

| Feature | Spree | Magento |
|---|---|---|
| Product reviews and/or ratings | Y, extension | Y |
| Product Q&A | N | N |
| Product SEO (URL, title, meta data control) | N | Y |
| Advanced/flexible taxonomy | Y, core | Y |
| SEO for taxonomy pages | N | Y |
| Configurable product search | Y, core | Y |
| Bundled products for discount | Y, extension | Y |
| Recently viewed products | Y, extension | Y |
| Soft product support/downloads | Y, extension | Y, I think so |
| Product … |

ecommerce ruby rails spree cms magento

Tracking Down Database Corruption With psql

I love broken Postgres. Really. Well, not nearly as much as I love the usual working Postgres, but it’s still a fantastic learning opportunity. A crash can expose a slice of the inner workings you wouldn’t normally see in any typical case. And, assuming you have the resources to poke at it, that can provide some valuable insight without lots and lots of studying internals (still on my TODO list.)

As a member of the PostgreSQL support team at End Point, I see a number of diverse situations cross my desk. So imagine my excitement when I get an email containing a bit of log output that would normally make a DBA tremble in fear:

LOG: server process (PID 10023) was terminated by signal 11

LOG: terminating any other active server processes

FATAL: the database system is in recovery mode

LOG: all server processes terminated; reinitializing

Oops, signal 11 is SIGSEGV, Segmentation Fault. Really not supposed to happen, especially in day to day activities. That’ll cause Postgres to drop all of its current sessions and restart itself, as the log lines indicate. That crash was in response to a specific query their application was running, which essentially runs a process on a column …
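As a small illustration of reading the log output above, here is a minimal sketch that pulls the PID and signal number out of the crash line and looks up the signal's name. The regular expression and the `describe_crash` helper are assumptions made for this example, based only on the single line quoted above; they are not part of Postgres or any Postgres tool.

```python
import re
import signal

# Pattern derived from the one crash line quoted in the post. It is an
# assumption for illustration, not a complete grammar for Postgres logs.
CRASH_RE = re.compile(
    r"server process \(PID (?P<pid>\d+)\) was terminated by signal (?P<signum>\d+)"
)


def describe_crash(line):
    """Return (pid, signal number, signal name) for a crash line, else None."""
    match = CRASH_RE.search(line)
    if match is None:
        return None
    signum = int(match.group("signum"))
    try:
        # signal.Signals maps a number to the platform's name for it.
        name = signal.Signals(signum).name
    except ValueError:
        name = "unknown"
    return int(match.group("pid")), signum, name


log_line = "LOG: server process (PID 10023) was terminated by signal 11"
result = describe_crash(log_line)
print(result)
```

Lines that do not match the pattern, such as the FATAL recovery-mode message, simply return `None`.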

postgres

The PGCon “Hall Track”

One of my favorite parts of PGCon is always the “hall track”, a general term for the sideline discussions and brainstorming sessions that happen over dinner, between sessions (or sometimes during sessions), and pretty much everywhere else during the conference. This year’s hall track topics seemed to be set by the developers’ meeting; everywhere I went, someone was talking about hooks for external security modules, MERGE, predicate locking, extension packaging and distribution, or exposing transaction order for replication. Other developers’ pet projects that didn’t appear in the meeting showed up occasionally, including unlogged tables and range types. Even more than, for instance, the wiki pages describing the things people plan to work on, these interstitial discussions demonstrate the vibrancy of the community and give a good idea just how active our development really is.

This year I shared rooms with Robert Haas, so I got a good overview of his plans for global temporary and unlogged tables. I spent a while with Jeff Davis looking through the code for exclusion constraints and deciding whether it was realistically possible to cause a starvation problem with many concurrent …

community conference database open-source postgres

Postgres Conference — PGCon2010 — Day Two

Day two of the PostgreSQL Conference started a little later than the previous day in obvious recognition of the fact that many people were up very, very late the night before. (Technically, this is day four, as the first two days consisted of tutorials; this was the second day of “talks”.)

The first talk I went to was PgMQ: Embedding messaging in PostgreSQL by Chris Bohn. It was well attended, although there were definitely a lot of late-comers and bleary eyes. A tough slot to fill! Chris is from Etsy.com and I’ve worked with him there, although I had no interaction with the PgMQ project, which looks pretty cool. From the talk description:

PgMQ (PostgreSQL Message Queueing) is an add-on that embeds a messaging client inside PostgreSQL. It supports the AMQP, STOMP and OpenWire messaging protocols, meaning that it can work with all of the major messaging systems such as ActiveMQ and RabbitMQ. PgMQ enables two replication capabilities: “Eventually Consistent” Replication and sharding.

As near as I can tell, “eventually consistent” is the same as “asynchronous replication”: the slave won’t be the same as the master right away, but will be eventually. As with Bucardo and Slony, the …

community conference database open-source postgres bucardo replication

Spree and Multi-site Architecture for Ecommerce

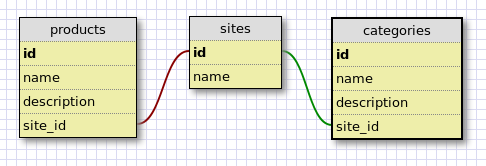

Running multiple stores from a single ecommerce platform instance seems to be quite popular these days. End Point has worked with several clients in developing a multi-store architecture running from one Interchange installation. In the case of our work with Backcountry.com, the data structure requires that a site_id column be included in product and navigation tables to specify which stores products and categories belong to. Frontend views are generated and “partial views” or “view components” are organized into a per-site directory structure and database calls request products against the current site_id. Below is a simplified view of the data structure used for Backcountry.com’s multi-site architecture.

Basic multi-store data architecture

A similar data model was implemented and enhanced for another client, College District. A site_id column exists in multiple tables including the products and navigation tables, and sites can only access data in the current site schema. College District takes one step further in development for appearance management by storing CSS values in the database and enabling CSS stylesheet generation on the fly with the stored CSS settings. This …
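The `site_id` approach described above can be sketched roughly as follows. This is a minimal illustration using an in-memory SQLite database, not the actual Backcountry.com or College District schema: only the `site_id` column and the `products` and `navigation` table names come from the text, while the `sites` table, the other columns, the sample rows, and the `products_for_site` helper are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical schema: every store-specific table carries a site_id column.
conn.executescript(
    """
    CREATE TABLE sites (
        id   INTEGER PRIMARY KEY,
        name TEXT NOT NULL
    );
    CREATE TABLE products (
        id      INTEGER PRIMARY KEY,
        site_id INTEGER NOT NULL REFERENCES sites(id),
        name    TEXT NOT NULL
    );
    CREATE TABLE navigation (
        id      INTEGER PRIMARY KEY,
        site_id INTEGER NOT NULL REFERENCES sites(id),
        label   TEXT NOT NULL
    );
    """
)

# Made-up sample data for two stores sharing one installation.
conn.executemany("INSERT INTO sites (id, name) VALUES (?, ?)",
                 [(1, "Store A"), (2, "Store B")])
conn.executemany("INSERT INTO products (site_id, name) VALUES (?, ?)",
                 [(1, "Trail Shoes"), (1, "Down Jacket"), (2, "Campus Hoodie")])


def products_for_site(connection, site_id):
    """Return product names for one store, filtered by the current site_id."""
    rows = connection.execute(
        "SELECT name FROM products WHERE site_id = ? ORDER BY name",
        (site_id,),
    )
    return [name for (name,) in rows]


store_a_products = products_for_site(conn, 1)
store_b_products = products_for_site(conn, 2)
print(store_a_products, store_b_products)
```

Each frontend request would pass its own store's `site_id` to queries like this one, so a store only ever sees its own rows even though all stores share the same tables.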

ecommerce ruby rails spree

PostgreSQL Conference - PGCon 2010 - Day One

The first day of talks for PGCon 2010 is now over; here’s a recap of the parts that I attended.

On Wednesday, the developers’ meeting took place. It was basically 20 of us gathered around a long conference table, with Dave Page keeping us to a strict schedule. While there were a few side conversations and contentious issues, overall we covered an amazing amount of things in a short period of time, and actually made action items out of almost all of them. My favorite decision we made was to finally move to git, something that I and others have been championing for years. The other most interesting parts for me were the discussion of what features we will try to focus on for 9.1 (it’s an ambitious list, no doubt), and DDL triggers! It sounds like Jan Wieck has already given this a lot of thought, so I’m looking forward to working with him in implementing these triggers (or at least nagging him about it if he slows down). These triggers will be immensely useful to replication systems like Bucardo and Slony, which implement DDL replication in a very manual and unsatisfactory way. These triggers will not be like the current triggers, in that they will not be directly attached to …

community conference database open-source postgres mongodb

PostgreSQL 8.4 on RHEL 4: Teaching an old dog new tricks

So a client has been running a really old version of PostgreSQL in production for a while. We finally got the approval to upgrade them from 7.3 to the latest 8.4. Considering the age of the installation, it should come as little surprise that they had been running a similarly ancient OS: RHEL 4.

Like the installed PostgreSQL version, RHEL 4 is ancient—5 years old. I anticipated that in order to get us to a current version of PostgreSQL, we’d need to resort to a source build or rolling our own PostgreSQL RPMs. Neither approach was particularly appealing.

While the age/decrepitude of the current machine’s OS came as little surprise, what did come as a surprise was that there were supported RPMs available for RHEL 4 in the community Yum RPM repository (modulo your architecture of choice).

In order to get things installed, I followed the instructions for installing the specific yum repo. There were a few seconds where I was confused because the installation command was giving a “permission denied” error when attempting to install the 8.4 PGDG rpm as root. A little brainstorming and an lsattr later revealed that a previous administrator, apparently in the quest for über-security, had …

database postgres redhat

PostgreSQL switches to Git

Looks like the Postgres project is finally going to bite the bullet and switch to git as the canonical VCS. Some details are yet to be hashed out, but the decision has been made and a new repo will be built soon. Now to lobby to get that commit-with-inline-patches list to be created…

database git postgres