Vim tip of the day: tabbed editing

How many tabs does your browser have open? I have 17 tabs open in Firefox presently (and opened and closed about 12 while writing this post). Most users will agree that tabs have changed the way they use the Web. Even IE, which has spawned a collection of shells for tabbed browsing, now supports it natively. Tabs save a great deal of screen real estate and, in many cases, allow better interaction among the various open documents. Considering how much time programmers spend in their text editors, it seems logical that the editor should provide the same functionality.

And the Vim developers agree. Although Vim calls them “tab-pages”, the functionality is there, waiting to be used. Before reading any further, ensure that your Vim supports tabs. You can do this by running this command, on anything resembling Unix:

vim --version | fgrep +windows

If you don’t see any output, check your vendor’s packaging system for something like vim-full. If you don’t have a Vim available with the windows feature, go get one and come back.
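If you want to script that check, here is a minimal sketch. It reads `vim --version` style text on standard input and looks for the same +windows marker as the command above. The function name `supports_tab_pages` is made up for this example, and the sketch assumes a disabled feature is listed as -windows.

```shell
#!/bin/sh
# Sketch: decide from `vim --version` text (read on stdin) whether the
# windows feature is compiled in. The function name is hypothetical;
# the +windows marker is the one the post greps for.
supports_tab_pages() {
    # -F treats the pattern as a fixed string, -q suppresses output,
    # -e keeps the leading "+" from being mistaken for anything else.
    if grep -F -q -e '+windows'; then
        echo "tab pages available"
    else
        echo "tab pages not available"
    fi
}

# Typical use, assuming vim is on your PATH:
#   vim --version | supports_tab_pages
```

Reading from standard input keeps the function usable on saved version output as well as on a live Vim.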

Now that you can use tabs, let’s get started. One way to open tabs is via the command line. Vim uses the -p option to determine how many tab-pages to open. …

tips vim

Interchange jobs caveat

I’d used Interchange’s jobs feature to handle sending out email expirations and re-invites for a client. However, I found out the hard way that scratch variables persist between individual sub-jobs in the job set. I’d tested each of the two sub-jobs in isolation and had no issues.

This bit me because I’d assumed each job component was run in isolation and variables were initialized with sensible (aka empty) content. In my case it fortunately only affected the reporting of each piece of the job system, but definitely could have affected larger pieces of the system.

The lessons? 1) Always explicitly initialize your variables; you don’t know the ultimate context they’ll be run in. 2) Individual component testing is no substitute for testing a system as a whole; you can reveal bugs that would otherwise slip through.
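The first lesson can be illustrated with an analogy in plain shell. This is not Interchange code: the function and variable names are hypothetical, and shell functions sharing one process merely stand in for sub-jobs sharing scratch space.

```shell
#!/bin/sh
# Analogy only: shell functions in one process share variables, much as
# the post describes Interchange sub-jobs sharing scratch variables.
# All names here are hypothetical.

# First sub-job: leaves a value behind in `report`.
send_expirations() {
    report="expirations sent"
}

# Second sub-job, written as if `report` always starts out empty.
send_reinvites_unsafe() {
    report="${report:+$report; }re-invites sent"
    printf '%s\n' "$report"
}

# Same sub-job, but it initializes `report` before using it.
send_reinvites_safe() {
    report=""
    report="${report:+$report; }re-invites sent"
    printf '%s\n' "$report"
}

send_expirations
send_reinvites_unsafe   # prints: expirations sent; re-invites sent
send_expirations
send_reinvites_safe     # prints: re-invites sent
```

Run on its own, the unsafe version prints only "re-invites sent", which is why testing each piece in isolation does not reveal the problem.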

interchange

Vim Tip of the Day: running external commands in a shell

A common sequence of events when editing files is to make a change and then need to test by executing the file you edited in a shell. If you’re using Vim, you could suspend your session (ctrl-Z), and then run the command in your shell.

That’s a lot of keystrokes, though.

So, instead, you can use Vim’s built-in “run a shell command”!

:!{cmd}
Runs a shell command, shows you the output, and prompts you before returning to your current buffer.

Even sweeter is to use Vim’s special character for the current filename: %

Here’s :! % in action!

A few more helpful shortcuts related to executing things in the shell:

:!
By itself, runs the last external command (from your shell history)

:!!
Repeats the last command

:silent !{cmd}
Eliminates the need to hit enter after the command is done

:r !{cmd}
Puts the output of $cmd into the current buffer.

tips vim

Emacs Tip of the Day: ediff-revision

I recently discovered a cool feature of Emacs: M-x ediff-revision. This launches the excellent ediff-mode and accepts revisions spelled the way the configured version control system understands them. In my case, I wanted to compare all changes between two git branches introduced several commits ago relative to each branch’s head.

M-x ediff-revision prompted for a filename (defaulting to the current buffer’s file) and two revision arguments, which in vc-git’s case ends up being anything recognized by git rev-parse. So I was able to provide the simple revisions master^ and otherbranch^{4} and have it Do What I Mean™.

I limited the diff hunks in question to those matching specific regexes (different for each buffer) and was able to quickly and easily verify that all of the needed changes had been made between each of the branches.

As usual, C-h f ediff-revision is a good jumping off point for finding more about this useful editor command, as is C-h f ediff-mode for finding more about ediff-mode in general.

git emacs

pg_controldata

PostgreSQL ships with several utility applications to administer the server life cycle and clean up in the event of problems. I spent some time lately looking at what is probably one of the least well known of these, pg_controldata. This utility dumps out a number of useful tidbits about a database cluster, given the data directory it should look at. Here’s an example from a little-used 8.3.6 instance:

josh@eddie:~$ pg_controldata

pg_control version number: 833

Catalog version number: 200711281

Database system identifier: 5291243377389434335

Database cluster state: in production

pg_control last modified: Mon 09 Mar 2009 04:05:23 PM MDT

Latest checkpoint location: 0/B70E5B9C

Prior checkpoint location: 0/B70E5B5C

Latest checkpoint's REDO location: 0/B70E5B9C

Latest checkpoint's TimeLineID: 1

Latest checkpoint's NextXID: 0/307060

Latest checkpoint's NextOID: 37410

Latest checkpoint's NextMultiXactId: 1

Latest checkpoint's NextMultiOffset: 0

Time of latest checkpoint: Fri 06 Mar 2009 02:27:02 PM MST

Minimum recovery ending location: …

postgres

Scout barcode artistry

Once upon a time, UPC barcodes had to be pretty large for the barcode readers to work. That made the barcode roughly square. Some years ago newer standards came out and the barcodes were still the same width to maintain compatibility, but they could now be shorter, presumably because scanning technology had improved.

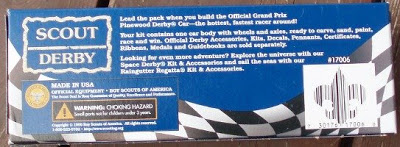

The combination of packaging that had space allocated for a tall barcode with the new reality that barcodes didn’t have to be tall was an invitation to creativity, as evidenced by the barcode on the box of this Scout Pinewood Derby kit I noticed today:

I love it—and the barcode is the only place on the box that the Scout emblem appears at all! Here it is closer up:

Has anyone seen this kind of artistically subverted but still functional barcode anywhere else?

art

Apache RewriteRule to a destination URL containing a space

Today I needed to do a 301 redirect for an old category page on a client’s site to a new category which contained spaces in the filename. The solution seemed like it would be easy and straightforward, and maybe it is to some, but I found it tricky because I had never escaped a space in the destination of an Apache RewriteRule.

The rewrite rule needed to rewrite:

/scan/mp=cat/se=Video Games

to:

/scan/mp=cat/se=DS Video Games

I was able to get the first part of the rewrite rule quickly:

^/scan/mp=cat/se=Video\sGames\.html$

The issue was figuring out how to properly escape the space on the destination page. A literal space, %20 and \s all failed to work properly. Jon Jensen took a look and suggested a standard Unix escape of ‘\ ’ and that worked. Sometimes a solution is right under your nose and it’s obvious once you step back or ask for help from another engineer. Googling for the issue did not turn up such a simple solution, hence this blog post.
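The backslash escaping described above is easy to get wrong by hand when a destination has several spaces, so here is a small sketch that does it with sed. The function name `escape_rewrite_target` is hypothetical, and the sketch only handles spaces, not other characters that might need attention in a rewrite destination.

```shell
#!/bin/sh
# Sketch: backslash-escape every space in a RewriteRule destination.
# The function name is hypothetical; the escaping style ("\ ") is the
# one the post settled on.
escape_rewrite_target() {
    # In the sed replacement, "\\ " yields a literal backslash plus a space.
    printf '%s\n' "$1" | sed 's/ /\\ /g'
}

escape_rewrite_target 'http://www.site.com/scan/mp=cat/se=DS Video Games.html'
# prints: http://www.site.com/scan/mp=cat/se=DS\ Video\ Games.html
```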

The final rule:

RewriteRule ^/scan/mp=cat/se=Video\sGames\.html$ http://www.site.com/scan/mp=cat/se=DS\ Video\ Games.html [L,R=301]

hosting seo

Passenger and SELinux

We recently ran into an issue when launching a client’s site using Phusion Passenger: it would not function with SELinux enabled. The cause turned out to be that Apache was not allowed to read/write the Passenger sockets. In researching the issue we found that another engineer had reported the problem, and there was discussion about making the socket location configurable. That would allow someone to place the sockets in a directory other than /tmp, set the context on the directory so that sockets created within it have the same context, and then grant httpd the ability to read/write sockets with that specific context. This is a win over granting httpd the ability to read/write all sockets in /tmp, since many other services place their sockets there and you may not want httpd to be able to read/write those.

End Point had planned to take on the task of patching Passenger and submitting the patch. While collecting information about the issue this morning to pass to Max, I found this in the issue tracker for Passenger:

Comment 4 by honglilai, Feb 21, 2009 Implemented.

Status: Fixed

Labels: Milestone-2.1.0

Excellent! We’ll be …

environment rails