PostgreSQL startup Debian logging failure

I ran into trouble debugging why a fresh PostgreSQL replica wasn’t starting on Debian. The replica used a highly customized postgresql.conf file with a custom logging location, data_directory, and other settings.

The system log files showed nothing about the failed pg_ctlcluster run, and there was no information in /var/log/postgresql/ or the defined log_directory either.

I was able to successfully create a new cluster with pg_createcluster and see logs for the new cluster in /var/log/postgresql/. The utility pg_lsclusters showed both clusters in the listing, but the initial cluster was still down, showing up with a custom log location. After reviewing the Debian wrapper scripts (fortunately written in Perl) I disabled log_filename, log_directory, and logging_collector, leaving log_destination = stderr. I was then finally able to get log information spit out to the terminal.

In this case, the startup failure was due to a fresh Amazon EC2 instance lacking appropriate sysctl.conf settings for kernel.shmmax and kernel.shmall. This particular error occurred before logging was fully set up, which is why we did not get any log output in the location designated in postgresql.conf.
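A quick way to see what a fresh instance currently allows is to read the kernel's values directly. The sketch below assumes a Linux host that exposes these settings under /proc/sys/kernel; the helper name show_shm_limits is made up for illustration.

```shell
# Hypothetical helper: print the current System V shared memory limits.
# Assumes Linux, where the values are readable under /proc/sys/kernel.
show_shm_limits() {
    for name in shmmax shmall; do
        printf 'kernel.%s = %s\n' "$name" "$(cat "/proc/sys/kernel/$name")"
    done
}

show_shm_limits
```

Note that kernel.shmmax is measured in bytes while kernel.shmall is measured in pages. Persistent changes go in /etc/sysctl.conf and can be loaded with sysctl -p.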

Once I had the …

postgres

Tickle me Postgres: Tcl inside PostgreSQL with pl/tcl and pl/tclu

Although I really love Pl/Perl and find it the most useful language to write PostgreSQL functions in, Postgres has had (for a long time) another set of procedural languages: Pl/Tcl and Pl/TclU. The Tcl language is pronounced “tickle”, so those two languages are pronounced as “pee-el-tickle” and “pee-el-tickle-you”. The pl/tcl languages have been around since before any others, even pl/perl; for a long time in the early days of Postgres using pl/tclu was the only way to do things “outside of the database”, such as making system calls, writing files, sending email, etc.

Sometimes people are surprised when they hear I still use Tcl. Although it’s not as widely mentioned as other procedural languages, it’s a very clean, easy to read, powerful language that shouldn’t be overlooked. Of course, with Postgres, you have a wide variety of languages to write your functions in, including:

The nice thing about Tcl is that not only is it an easy language to write in, it’s fully supported by Postgres. Only three languages are maintained inside the Postgres tree itself: Perl, Tcl, …

database postgres

LinuxFest Northwest: PostgreSQL 9.0 upcoming features

Once again, LinuxFest Northwest provided a full track of PostgreSQL talks during their two-day conference in Bellingham, WA.

Gabrielle Roth and I presented our favorite features in 9.0, including a live demo of Hot Standby with streaming replication! We also demonstrated features like:

- the newly improved ‘set storage MAIN’ behavior (TOAST related)

- ‘samehost’ and ‘samenet’ designations to pg_hba.conf (see CIDR-address section)

- Log changed parameter values when postgresql.conf is reloaded

- Allow EXPLAIN output in XML, JSON, and YAML formats (which our own Greg Sabino Mullane worked on!)

- Allow NOTIFY to pass an optional string to listeners

- And of course—Hot Standby and Streaming Replication

The full feature list is available on the developer site right now!

postgres

Viewing Postgres function progress from the outside

Getting visibility into what your PostgreSQL function is doing can be a difficult task. While you can sprinkle notices inside your code, for example with the RAISE feature of plpgsql, that only shows the notices to the session that is currently running the function. Let’s look at a solution to peek inside a long-running function from any session.

While there are a few ways to do this, one of the most elegant is to use Postgres sequences, which have the unique property of living “outside” the normal MVCC visibility rules. We’ll abuse this feature to allow the function to update its status as it goes along.

First, let’s create a simple example function that simulates doing a lot of work, and taking a long time to do so. The function doesn’t really do anything, of course, so we’ll throw some random sleeps in to emulate the effects of running on a busy production machine. Here’s what the first version looks like:

DROP FUNCTION IF EXISTS slowfunc();

CREATE FUNCTION slowfunc()

RETURNS TEXT

VOLATILE

SECURITY DEFINER

LANGUAGE plpgsql

AS $BC$

DECLARE

x INT = 1;

mynumber INT;

BEGIN

RAISE NOTICE 'Start of function';

WHILE x <= 5 LOOP

-- Random number from 1 to 10 …

database postgres

Make git grep recurse into submodules

If you’ve done any major work with projects that use submodules, you may have been surprised that git grep fails to return matches located inside a submodule. If you go into the specific submodule directory and run the same git grep command, you will see the results, so what to do in that case?

Fortunately, git submodule has a subcommand which lets us execute arbitrary commands in all submodule repos, intuitively named git submodule foreach.

My first attempt at a command to search in all submodules was:

$ git submodule foreach git grep {pattern}

This worked fine, except when {pattern} was multiple words or otherwise needed shell escaping. My next attempt was:

$ git submodule foreach git grep "{pattern}"

This properly passed the escapes to the shell (ending up with “‘multi word phrase’” in my case); however, an additional problem surfaced: git grep returns a non-zero exit status when it finds no match, and that return value aborted the foreach loop. This was solved via:

$ git submodule foreach "git grep {pattern}; true"

A more refined version could be created as a git alias, automatically escape its arguments, and union with the results of git grep, thus providing the …
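One way to put these pieces together is a small shell function. This is only a sketch, not the alias the post goes on to describe: the name git_grep_all and the GREP_PATTERN environment variable are invented here. Passing the pattern through the environment sidesteps the quoting problem, and the trailing true keeps foreach from aborting when a submodule has no match.

```shell
# Hypothetical helper: search the top-level repository and every submodule.
# Usage: git_grep_all 'multi word phrase'
git_grep_all() {
    # Search the superproject first. "|| true" ignores the non-zero exit
    # status that git grep returns when nothing matches.
    git grep -n -e "$1" || true

    # foreach runs its argument through a shell inside each submodule.
    # The single quotes defer expansion of $GREP_PATTERN to that shell,
    # so the pattern never needs a second round of escaping.
    GREP_PATTERN="$1" git submodule foreach 'git grep -n -e "$GREP_PATTERN" || true'
}
```

The "Entering ..." lines that foreach prints show which submodule each group of matches came from. Newer Git releases also provide git grep --recurse-submodules, which may make a helper like this unnecessary.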

git

Spree and Authorize.Net: Authorization and Capture Quick Tip

Last week I did a bit of reverse engineering on payment configuration in Spree. After I successfully set up Spree to use Authorize.Net for a client, the client was unsure how to change the Authorize.Net settings to perform an authorize and capture of the credit card instead of an authorize only.

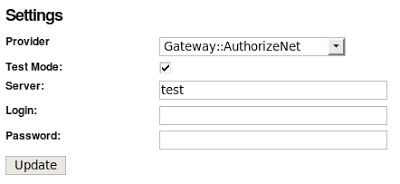

The requested settings for an Authorize.Net payment gateway on the Spree backend.

I searched the Spree documentation for a bit and then sent out an email to the End Point team. Mark Johnson responded to my question on authorize versus authorize and capture, suggesting that the Authorize.Net request type be changed from “AUTH_ONLY” to “AUTH_CAPTURE”. So, my first stop was a grep of the activemerchant gem, which is responsible for handling the payment transactions in Spree. I found the following code in the gem source:

# Performs an authorization, which reserves the funds on the customer's credit card, but does not

# charge the card.

def authorize(money, creditcard, options = {})

post = {}

add_invoice(post, options)

add_creditcard(post, creditcard)

add_address(post, options)

add_customer_data(post, options)

add_duplicate_window(post)

commit('AUTH_ONLY', money, post)

end

# …

ecommerce rails spree

jQuery UI Sortable Tips

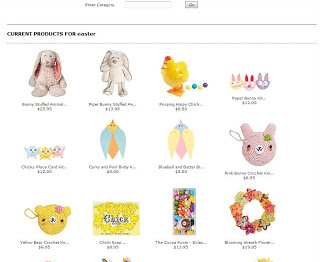

I was recently tasked with developing a sorting tool to allow Paper Source to manage the order in which their categories are displayed. They had been updating a sort column in the database but wanted a more visual way to do so. Due to the well-received feature developed by Steph, it was decided that they wanted to adapt their upsell interface to manage the categories. See here for the post using jQuery UI Drag Drop.

The only backend requirement was that the same sort column be used to drive the order. The front end required the ability to drag and drop positions within the same container. The upsell feature provided a great starting point for the development. After a quick review I determined that the jQuery UI Sortable function would be a better fit for the application.

Visual feedback was used to display the sorting in action with:

// on page load

$('tr.the_items td').sortable({

opacity: 0.7,

helper: 'clone'

});

// end on page load

Secondly I reiterate “jQuery UI Event Functionality = Cool”

I only needed to use one function for this application to arrange the sorting values once the thumbnail had been dropped. This code …

browsers javascript jquery

PostgreSQL at LinuxFest Northwest

This is my third year driving up to Bellingham for LinuxFest Northwest, and I’m excited to be presenting two talks about PostgreSQL there. Adrian Klaver is one of the organizers of the conference, and has always been a huge supporter of PostgreSQL. He has gone out of his way to have a track of content about our favorite database.

I’ll be presenting an introduction to Bucardo and co-hosting a talk about new features in version 9.0 of PostgreSQL with Gabrielle Roth.

Talking about Bucardo and replication is always a blast. The last time, I gave this talk to a packed house in Seattle, so I’m hoping for another lively discussion about the state of replication in PostgreSQL.

conference postgres bucardo