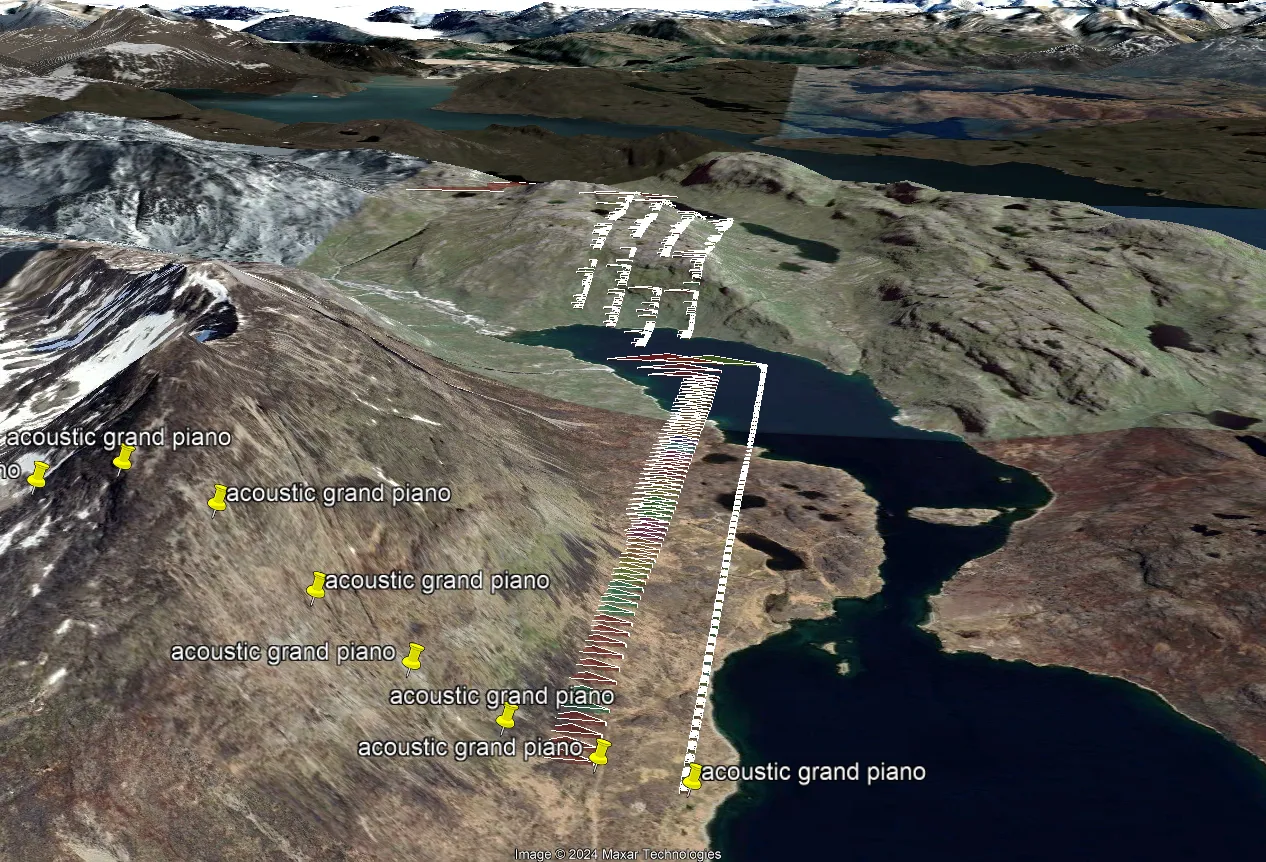

Converting MIDI to KML using AI: Bach’s Notes in the Hills of Greenland

I have always been interested in ways of representing music visually. Aside from conventional music notation, I imagined other cross-modal generation methods that could take a sound and generate an image. In the same vein, I have frequently envisioned a 3D landscape in which you could discover musical “objects”.

Well, now I’ve realized a version of this dream, with caveats that I’ll mention later. In this blog post I would like to demonstrate how I used AI (in my case ChatGPT using GPT-4 Turbo) to create an interesting JavaScript application from just a few phrases. The application takes an existing piece of music represented by a MIDI file as input and creates a KML file as output, which you can view as 3D objects somewhere on the globe.

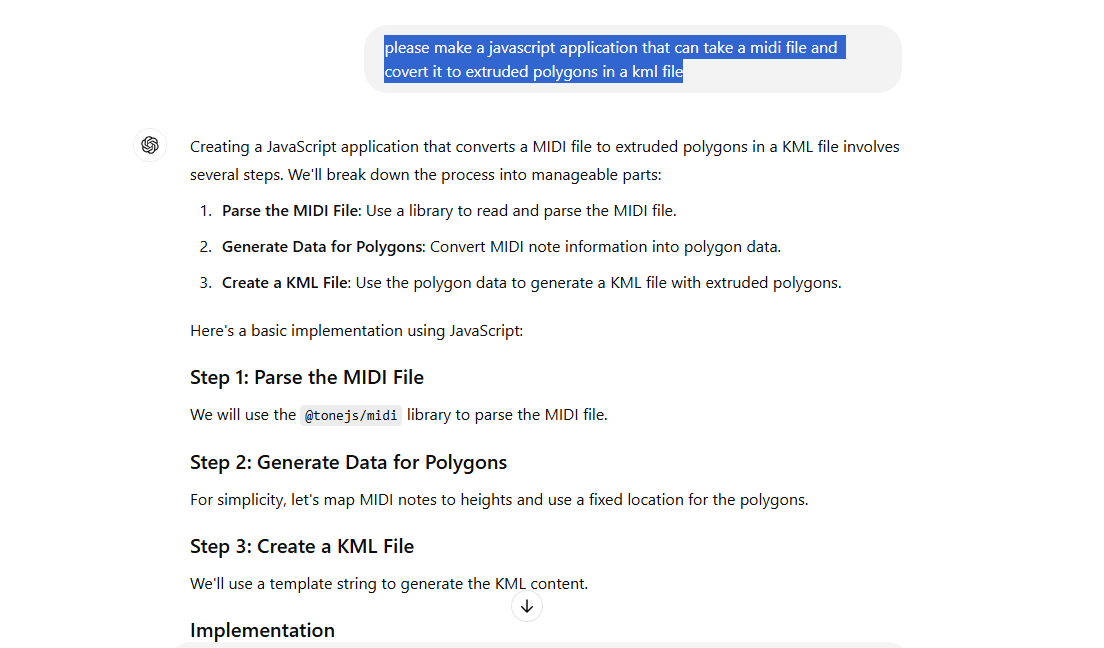

Here is how I enlisted ChatGPT to help me:

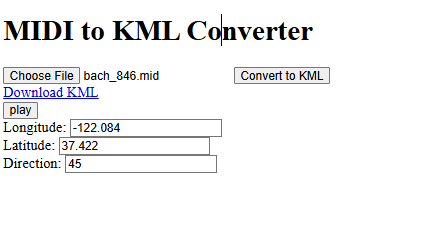

please make a javascript application that can take a MIDI file and covert it to extruded polygons in a kml file

Here is a part of its response:

I was amazed. It included code to select the MIDI file, convert it to KML, and generate an output file. Plus, ChatGPT correctly interpreted my request despite my “covert” typo. :-)
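The generated JavaScript is not reproduced in this post, so as a rough illustration of the idea, here is a minimal Python sketch of how notes could become extruded polygons in KML. The function names, the square footprint, the east-west time axis, and the `metres_per_semitone` scale are all assumptions made for this example, not what ChatGPT actually produced.

```python
import xml.etree.ElementTree as ET

KML_NS = "http://www.opengis.net/kml/2.2"


def add_note_placemark(parent, name, lon, lat, size_deg, height_m):
    """Append a Placemark with a square footprint extruded up to height_m."""
    placemark = ET.SubElement(parent, "Placemark")
    ET.SubElement(placemark, "name").text = name
    polygon = ET.SubElement(placemark, "Polygon")
    # extrude=1 connects the polygon down to the ground, forming a solid block
    ET.SubElement(polygon, "extrude").text = "1"
    ET.SubElement(polygon, "altitudeMode").text = "relativeToGround"
    boundary = ET.SubElement(polygon, "outerBoundaryIs")
    ring = ET.SubElement(boundary, "LinearRing")
    corners = [
        (lon, lat),
        (lon + size_deg, lat),
        (lon + size_deg, lat + size_deg),
        (lon, lat + size_deg),
        (lon, lat),  # a KML ring repeats its first vertex to close the shape
    ]
    ET.SubElement(ring, "coordinates").text = " ".join(
        f"{x:.6f},{y:.6f},{height_m:.1f}" for x, y in corners
    )
    return placemark


def notes_to_kml(notes, origin_lon, origin_lat,
                 size_deg=0.0001, metres_per_semitone=2.0):
    """Build a KML document from (pitch, start_seconds) pairs.

    Hypothetical layout: time runs east from the origin, pitch sets the height.
    """
    kml = ET.Element("kml", xmlns=KML_NS)
    document = ET.SubElement(kml, "Document")
    for pitch, start_s in notes:
        add_note_placemark(
            document,
            f"pitch {pitch}",
            origin_lon + start_s * size_deg * 2,
            origin_lat,
            size_deg,
            pitch * metres_per_semitone,
        )
    return ET.tostring(kml, encoding="unicode")
```

Calling `notes_to_kml([(60, 0.0), (64, 1.0)], -51.0, 64.0)` returns a KML string with two placemarks that can be saved to a `.kml` file.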

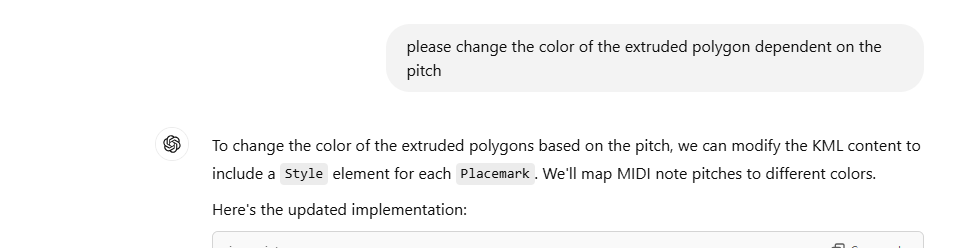

Before testing it, I wanted the color of each extruded polygon to depend on the pitch of the note. So next I entered:

please change the color of the extruded polygon dependent on the pitch

Here is what it said:

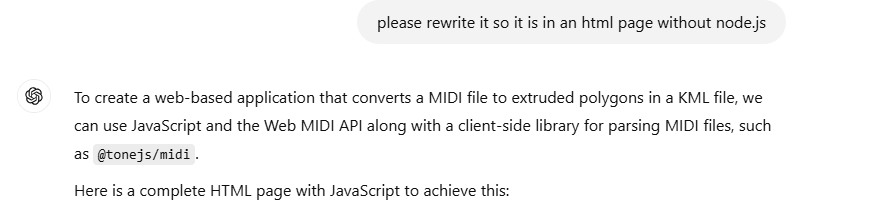
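One possible pitch-to-color mapping, sketched in Python. KML stores colors as `aabbggrr` hex (alpha, blue, green, red), which is easy to get backwards. The hue ramp from red to blue and the function name `pitch_to_kml_color` are assumptions for illustration; the mapping the app really uses may differ.

```python
import colorsys


def pitch_to_kml_color(pitch, alpha=0xFF):
    """Map a MIDI pitch (0-127) to a KML 'aabbggrr' hex color string.

    Low pitches come out red, high pitches blue, with the hue
    sweeping through yellow, green and cyan in between.
    """
    pitch = max(0, min(127, pitch))  # clamp to the valid MIDI range
    hue = (pitch / 127) * (2 / 3)    # 0 = red, 2/3 = blue
    red, green, blue = (
        round(channel * 255) for channel in colorsys.hsv_to_rgb(hue, 1.0, 1.0)
    )
    # KML byte order is alpha, blue, green, red (not the usual RGB order)
    return f"{alpha:02x}{blue:02x}{green:02x}{red:02x}"
```

The returned string would go inside a `<PolyStyle><color>` element in the KML style for each polygon.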

It was implemented using npm and Node.js, which seemed excessive for this small application. To make an easier-to-run version in a single HTML file, I entered:

please rewrite it so it is in an html page without node.js

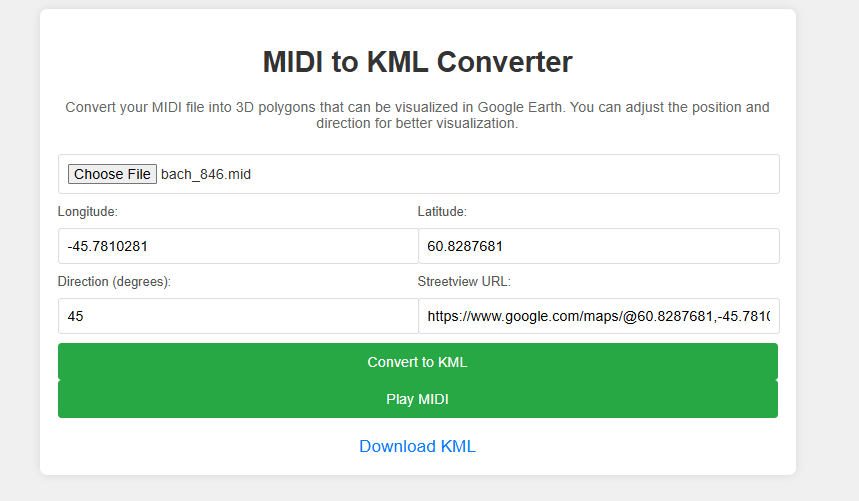

I thought it would be nice to be able to easily place the polygons anywhere in the world.

I asked it to take a Google Street View position from Google Maps and decode the latitude, longitude, and heading.
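As a sketch of what that decoding can look like, the Python below assumes the commonly seen Street View URL shape `.../maps/@<lat>,<lon>,3a,75y,<heading>h,<tilt>t/...`. Google does not document this format as stable, and the function name and the example URL are made up for illustration.

```python
import re


def parse_street_view_url(url):
    """Extract latitude, longitude and heading from a Google Maps URL.

    Assumes an '@lat,lon' pair and an optional ',<degrees>h' heading token.
    The heading falls back to 0.0 (north) when no such token is present.
    """
    position = re.search(r"@(-?\d+(?:\.\d+)?),(-?\d+(?:\.\d+)?)", url)
    if position is None:
        raise ValueError("no @lat,lon pair found in URL")
    heading = re.search(r",(\d+(?:\.\d+)?)h(?=[,/]|$)", url)
    return {
        "lat": float(position.group(1)),
        "lon": float(position.group(2)),
        "heading": float(heading.group(1)) if heading else 0.0,
    }
```

For a hypothetical URL such as `https://www.google.com/maps/@64.175,-51.7361,3a,75y,210.5h,90t/data=xyz`, this yields latitude 64.175, longitude -51.7361, and heading 210.5.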

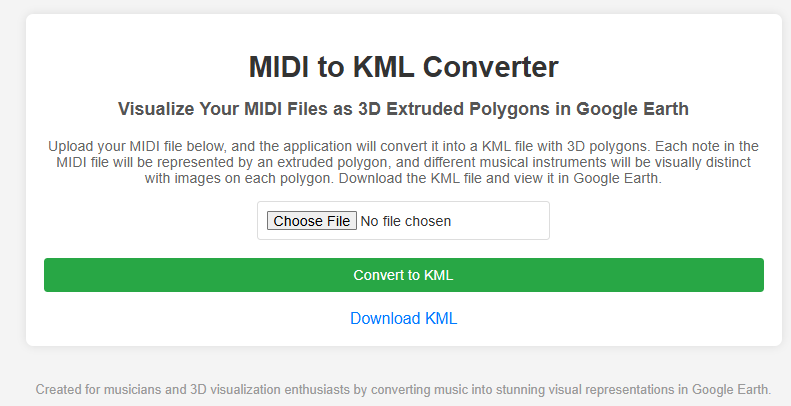
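With a position and heading in hand, each note still needs its own coordinates. One way to do this, shown here as a Python sketch under a flat-earth approximation, is to walk a given number of metres from the origin along the heading. The helper name `offset_position` and the choice of approximation are assumptions; the post does not show how the app computes positions.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def offset_position(lat, lon, distance_m, heading_deg):
    """Move distance_m metres from (lat, lon) along heading_deg.

    Heading is measured clockwise from north (0 = north, 90 = east).
    Uses a flat-earth approximation, which is fine for short offsets
    but degrades near the poles and over long distances.
    """
    heading = math.radians(heading_deg)
    north_m = distance_m * math.cos(heading)
    east_m = distance_m * math.sin(heading)
    delta_lat = math.degrees(north_m / EARTH_RADIUS_M)
    # A degree of longitude shrinks with the cosine of the latitude
    delta_lon = math.degrees(
        east_m / (EARTH_RADIUS_M * math.cos(math.radians(lat)))
    )
    return lat + delta_lat, lon + delta_lon
```

For example, placing one note every 10 metres along the Street View heading would mean calling `offset_position(lat, lon, 10 * i, heading)` for note index `i`.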

Next, I asked it to make a nicer user interface. It “understood” the purpose of the application very well and came up with a very nice interface explaining what it does.

How to try out the application

You can try out a version of the application at https://darius.endpointdev.com/midi2kml/midi2kml_improved.html.

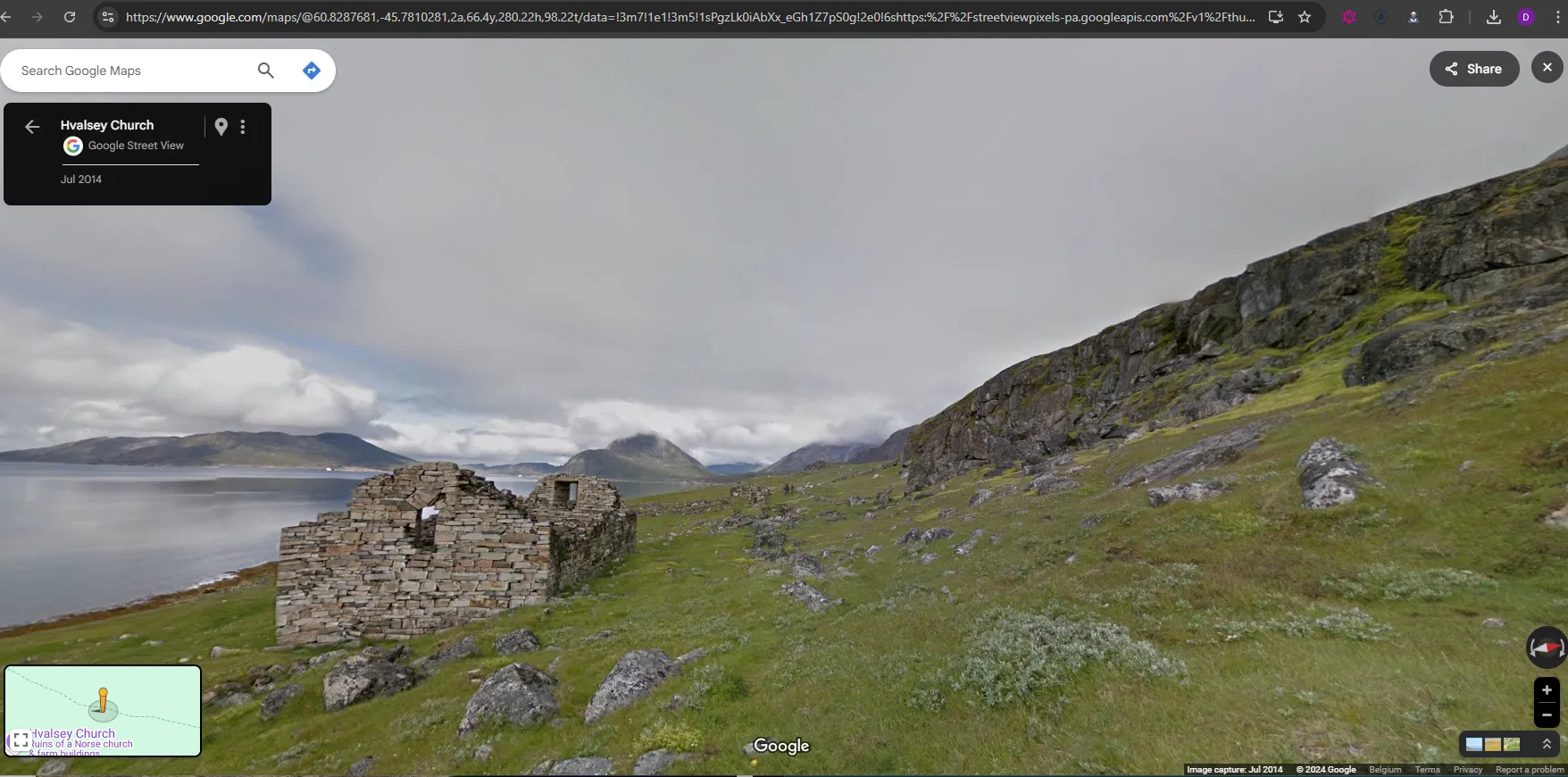

- Find a nice place in Google Street View to have the MIDI data displayed. Copy the URL and paste it into the Street View URL field.

For example, here is a place in Greenland:

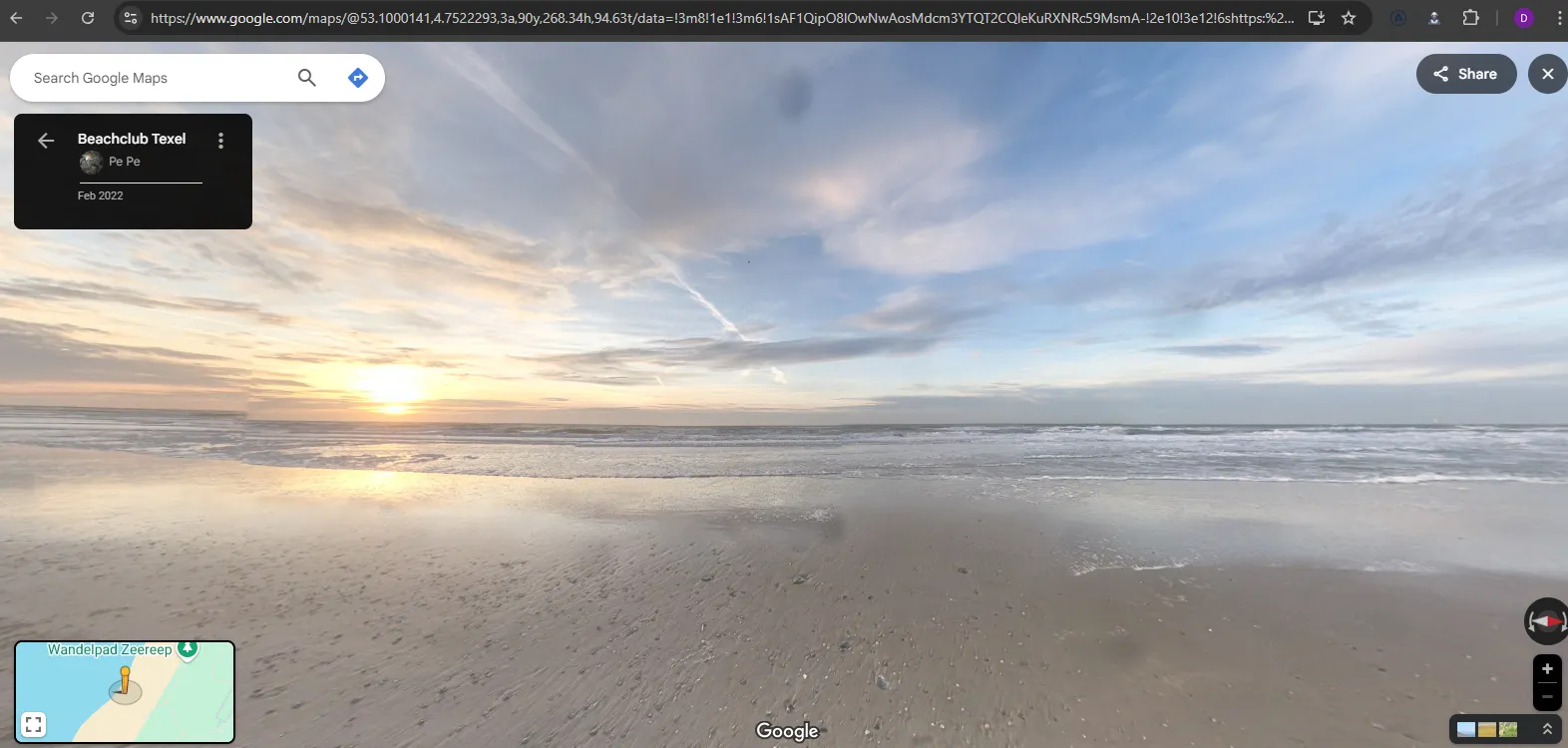

And here’s one on the beach in Texel in the Netherlands:

- Find a nice MIDI file like Bach’s Prelude and Fugue in C major. Download it and select it in the “choose MIDI file” field.

- Press “Convert to KML”.

- Click on the “download KML” link.

- Open the KML file in a KML viewer, or load it manually into a program like Google Earth or Cesium.

Issues

This application works at least partially: it places triangular prisms for each MIDI track along a timeline and shows different colors for different notes (though the color–note correlation is not clear). There are plenty of issues and questions, however:

- There are lots of overlapping polygons. It’s unclear whether these are just errors or represent different dynamics or articulations.

- The “Play MIDI” button doesn’t work — it plays tones of some kind, but in my testing it was either a single bell sound, or computer noise reminiscent of an AOL dial-up modem.

- When I tested it on an orchestral score (Mozart’s Requiem, Kyrie), the instrument label pins were off to the side, not indicating which line of notes they corresponded to, and were mostly labeled “acoustic grand piano,” which is not an instrument included in the MIDI file.

Using AI for blog post image processing

As an aside, I wrote this blog post in Google Docs with PNG pictures embedded in it. Our blog structure requires a Markdown document with separate WebP images. Google Docs has a nice Markdown export function, but it converts the PNG images into embedded Base64-encoded PNGs. So I asked ChatGPT to extract the Base64-encoded PNGs and create WebP files. It created a Python script that did this perfectly:

```python
import os
import base64
import re
from PIL import Image
from io import BytesIO

# Path to your Markdown file
md_file_path = "example.md"

# Directory to store extracted and converted WEBP images
output_dir = "converted_images"
os.makedirs(output_dir, exist_ok=True)

# Load the Markdown content
with open(md_file_path, "r") as md_file:
    content = md_file.read()

# Regex to find base64-encoded PNGs in the Markdown
# (reference-style definitions such as "[image1]: <data:image/png;base64,...>")
base64_pattern = re.compile(
    r"\[.*?\]:\s*<data:image/png;base64,([A-Za-z0-9+/=]+)>"
)

# Initialize a counter for naming the files
counter = 1

for match in base64_pattern.finditer(content):
    base64_data = match.group(1)
    # Print only the start of the payload; the full string can be very long
    print(f"Match found: {base64_data[:40]}...")

    # Decode the base64 string to a PNG image
    png_data = base64.b64decode(base64_data)
    png_image = Image.open(BytesIO(png_data))

    # Save the PNG as a WEBP file
    webp_name = f"image_{counter}.webp"
    webp_path = os.path.join(output_dir, webp_name)
    png_image.save(webp_path, "WEBP")

    # Replace the base64 PNG in the Markdown with a reference to the WEBP file,
    # keeping the original "[label]:" prefix so existing image references still resolve
    content = content.replace(f"<data:image/png;base64,{base64_data}>", webp_path)

    counter += 1

# Save the updated Markdown file
updated_md_path = os.path.splitext(md_file_path)[0] + "_updated.md"
with open(updated_md_path, "w") as updated_md_file:
    updated_md_file.write(content)

print(f"Updated Markdown file saved: {updated_md_path}")
print(f"WEBP images saved in: {output_dir}")
```

Unfortunately, when Google Docs embeds the images, it seems to reduce their resolution, so for this post, old-school image processing it is!

