Knocking on Kubernetes’s Door (Ingress)

Photo by Alberto Capparelli

According to the Merriam-Webster dictionary, ingress means the act of entering, or an entrance. In the context of Kubernetes, Ingress is a resource that lets clients and users reach the services running inside a Kubernetes cluster. Thus Ingress is the entrance to a Kubernetes cluster! Let’s get to know it better and test it out.

Prerequisites

We are going to deploy the Nginx Ingress Controller on the Kubernetes cluster that comes with Docker Desktop. You will need the following:

- Docker Desktop with Kubernetes enabled. If you are not sure how to do this, please refer to my previous blog post on Docker Desktop and Kubernetes.

- Internet access to download the required YAML files and Docker images.

- The git command, to clone a Git repository.

- A decent editor such as Vim or Notepad++ to view and edit the YAML files.

Ingress and friends

To understand why we need Ingress, we first need to look at two other ways of exposing Kubernetes services, and at their shortcomings. Those two are the NodePort and LoadBalancer Service types. Then we will go over the details of Ingress.

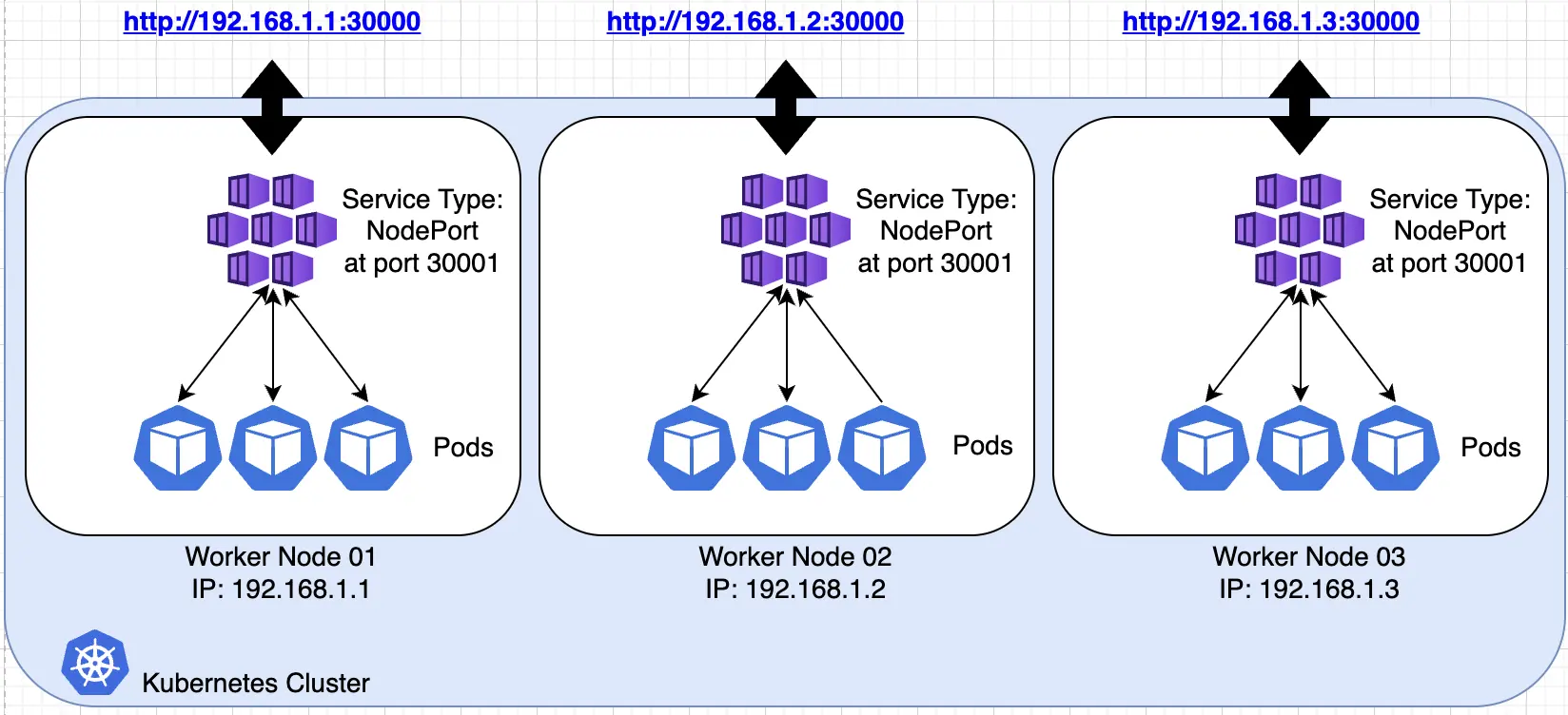

NodePort

NodePort is a type of Kubernetes Service that exposes an application on a high-numbered port, by default in the range 30000–32767. Every node in the cluster opens that port and forwards the traffic it receives to the Service. Clients therefore reach the service through a node’s IP address plus that port. In the following example, the NodePort service is exposed on port 30000.
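As a minimal sketch of such a Service, assuming a hypothetical application whose Pods carry the label app: my-app and listen on port 8080 (the name, label, and ports are illustrative and not taken from the repository), the manifest below requests node port 30000 to match the example:

```yaml
# Hypothetical NodePort Service; the name, label, and ports are illustrative.
apiVersion: v1
kind: Service
metadata:
  name: my-app-nodeport
spec:
  type: NodePort
  selector:
    app: my-app          # must match the labels on the application's Pods
  ports:
    - protocol: TCP
      port: 80           # Service port inside the cluster
      targetPort: 8080   # port the container listens on (assumed)
      nodePort: 30000    # opened on every node; must fall in 30000-32767 by default
```

If nodePort is left out, Kubernetes picks a free port from the range instead. Either way, clients connect to http://<node-ip>:30000, which is why an external load balancer is usually needed to hide the individual node addresses.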

To get a single entry point and secure SSL connections, we need an external load balancer in front of the Kubernetes cluster that terminates SSL and balances traffic across the IPs and ports exposed by the nodes. This is illustrated in the following diagram:

LoadBalancer

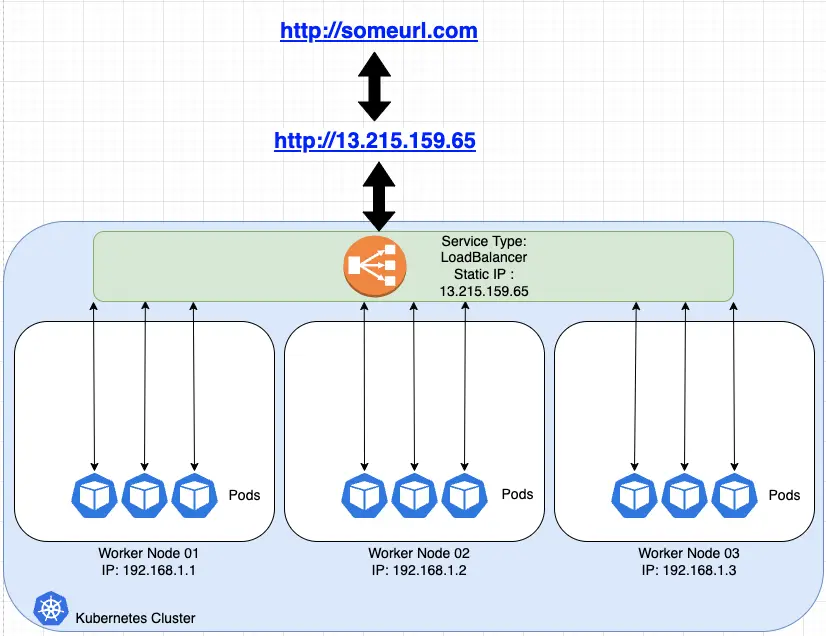

LoadBalancer is another Kubernetes Service type for exposing applications. It asks the cloud or infrastructure provider to provision an external load balancer, which is generally an OSI layer 4 load balancer with a static IP address. Because the implementation depends on the provider, the capabilities of a LoadBalancer vary.

In the following example, a LoadBalancer is exposed on the static public IP address 13.215.159.65 provided by a cloud provider. The IP address can also be registered in DNS so that clients can reach it by host name.
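A minimal sketch of the same hypothetical application exposed through a LoadBalancer Service follows; the name, label, and ports are illustrative assumptions, and the external IP itself is not set here because the provider assigns it:

```yaml
# Hypothetical LoadBalancer Service; the name, label, and ports are illustrative.
apiVersion: v1
kind: Service
metadata:
  name: my-app-lb
spec:
  type: LoadBalancer     # the cloud/infrastructure provider provisions the load balancer
  selector:
    app: my-app          # must match the labels on the application's Pods
  ports:
    - protocol: TCP
      port: 80           # port exposed on the external IP
      targetPort: 8080   # port the container listens on (assumed)
```

Once the provider has provisioned it, the assigned address shows up in the EXTERNAL-IP column of kubectl get service. Note that each such Service gets its own load balancer, and it only forwards TCP/UDP traffic; it knows nothing about URLs or host names.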

Ingress

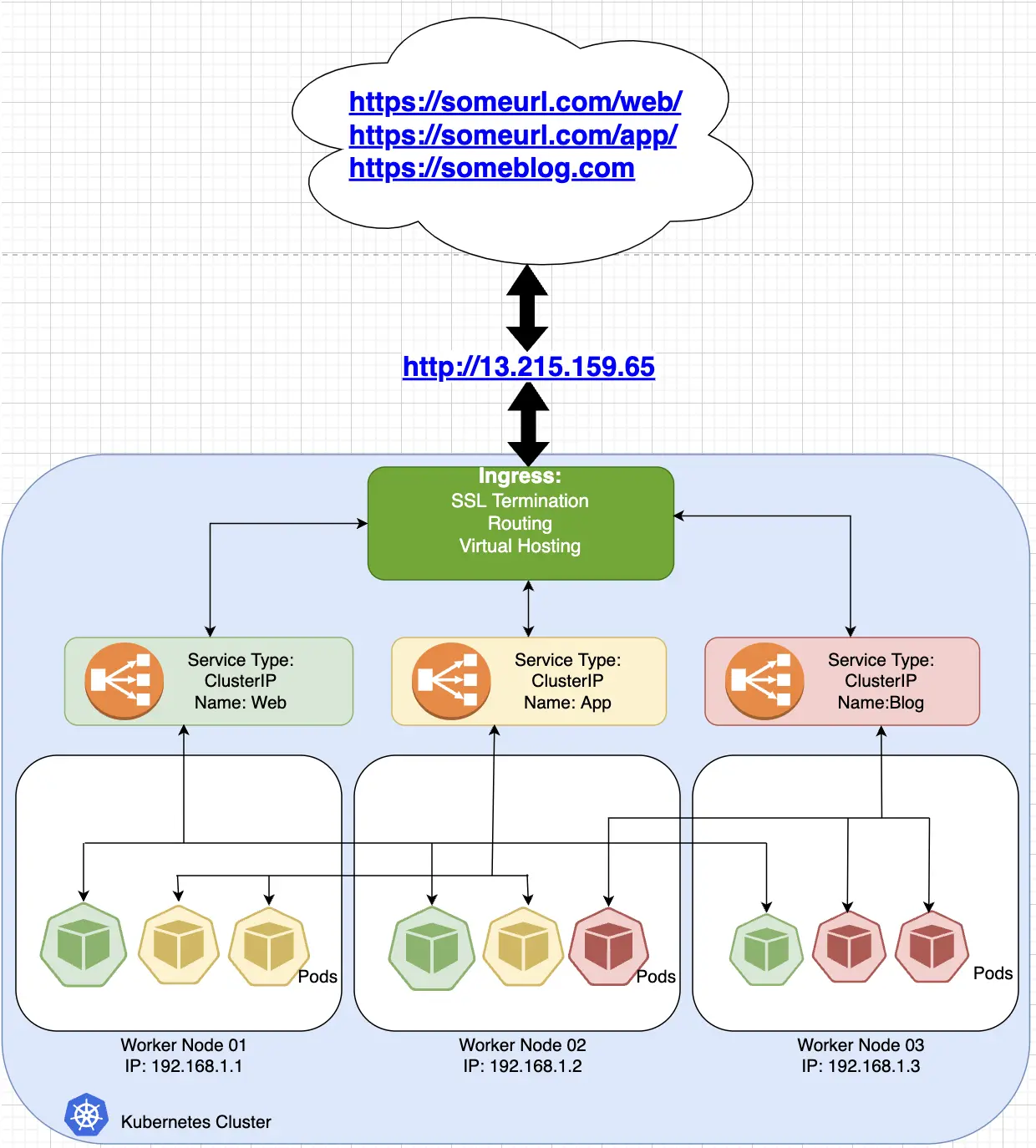

Ingress is a Kubernetes resource that serves as an OSI layer 7 load balancer. Unlike NodePort and LoadBalancer, Ingress is not a Kubernetes Service type. It is a separate Kubernetes resource that sits in front of one or more Services. It provides URL-based routing, SSL termination, and name-based virtual hosting. This is like a full-fledged load balancer inside the Kubernetes cluster!

The following diagram shows Ingress routing the someurl.com/web/ and someurl.com/app/ endpoints to their intended applications in the Kubernetes cluster, terminating SSL, and performing virtual hosting. Please note that, as of this writing, Ingress only supports the HTTP and HTTPS protocols.
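To make the diagram concrete, here is a minimal sketch of an Ingress resource for it. It assumes two hypothetical Services named web-service and app-service, a TLS certificate stored in a hypothetical Secret named someurl-tls, and the nginx ingress class used by the Nginx Ingress Controller:

```yaml
# Hypothetical Ingress for someurl.com; Service and Secret names are assumptions.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: someurl-ingress
spec:
  ingressClassName: nginx
  tls:
    - hosts:
        - someurl.com
      secretName: someurl-tls      # Secret of type kubernetes.io/tls (cert + key)
  rules:
    - host: someurl.com            # only requests for this host name match
      http:
        paths:
          - path: /web
            pathType: Prefix       # matches /web, /web/, /web/anything
            backend:
              service:
                name: web-service
                port:
                  number: 80
          - path: /app
            pathType: Prefix
            backend:
              service:
                name: app-service
                port:
                  number: 8080
```

The request path is passed to the backend unchanged, so the application behind web-service must serve paths under /web unless the controller is configured to rewrite them.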

To use Ingress in a Kubernetes cluster, we need to deploy two main things:

- Ingress Controller is the engine of the Ingress. It watches Ingress resources and implements the routing they describe. The Ingress Controller is a separate module, not one of the Kubernetes core components, so it has to be installed on its own. Many Ingress Controllers are available, such as Nginx, Istio, NSX, and more. See a longer list on the kubernetes.io page on Ingress controllers.

- Ingress Resource is the configuration that tells the controller how to route traffic. It is created by applying an Ingress Resource YAML, a typical Kubernetes resource file that requires the apiVersion, kind, metadata, and spec fields. Go to the kubernetes.io documentation on Ingress to learn more.
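The two pieces are linked through an IngressClass. As a hedged sketch, the official ingress-nginx manifests typically create an IngressClass like the one below, and an Ingress resource selects that controller by setting spec.ingressClassName: nginx:

```yaml
# IngressClass tying Ingress resources to the ingress-nginx controller.
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: nginx                        # referenced by spec.ingressClassName in an Ingress
spec:
  controller: k8s.io/ingress-nginx   # controller identifier that ingress-nginx claims
```

You normally do not need to write this yourself when installing from the official manifest; kubectl get ingressclass shows which classes exist in your cluster.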

How to deploy and use Ingress

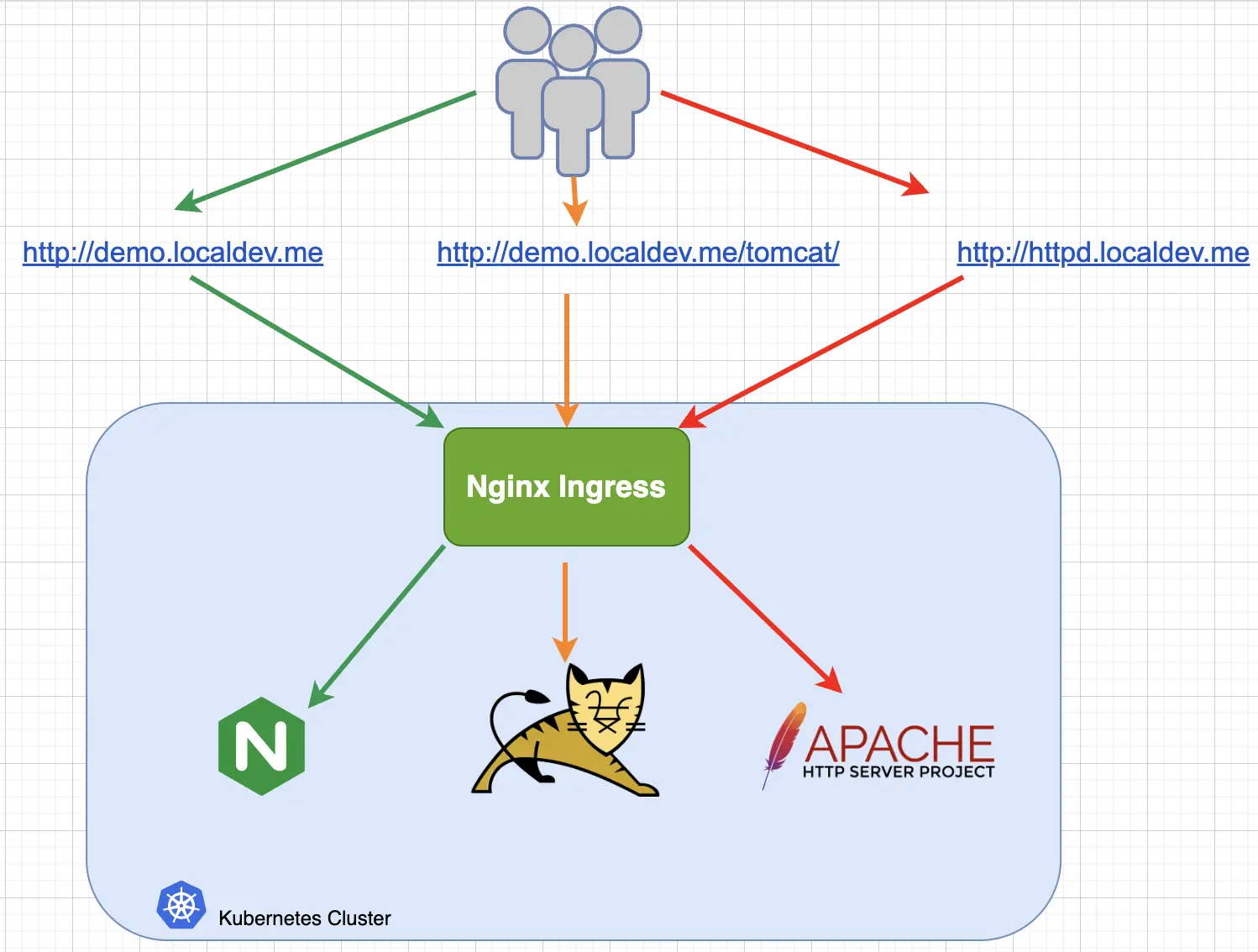

Now let’s deploy the Nginx Ingress Controller on Kubernetes in Docker Desktop. We will configure it to route traffic to three sample applications: an Nginx web server, a Tomcat web application server, and our old beloved Apache web server.

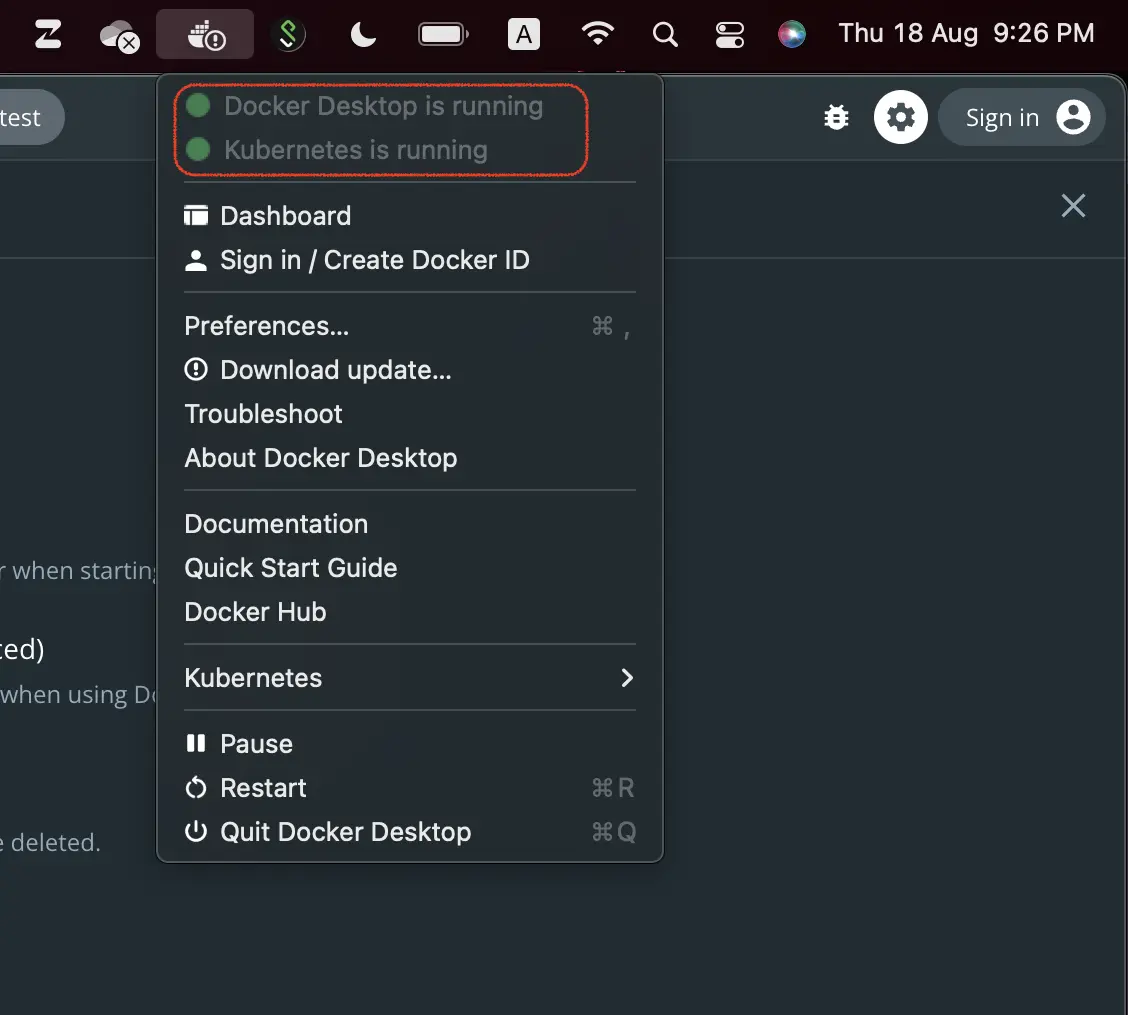

Start Docker Desktop with Kubernetes enabled. Right-click the Docker Desktop icon in the top-right area of your screen (near the left in this cropped screenshot) to confirm that both Docker and Kubernetes are running:

Clone my repository to get the required deployment YAML files:

git clone https://github.com/aburayyanjeffry/nginx-ingress.git

Let’s go through the downloaded files.

- 01-ingress-controller.yaml: This is the main deployment YAML for the Ingress Controller. It creates a new namespace named “ingress-nginx”, then creates the required service account, role, rolebinding, clusterrole, clusterrolebinding, service, configmap, and deployments in the “ingress-nginx” namespace. This YAML comes from the official ingress-nginx documentation; see those docs to learn more about this deployment.

- 02-nginx-webserver.yaml, 03-tomcat-webappserver.yaml, 04-httpd-webserver.yaml: These are the deployment YAML files for the sample applications. They are typical Kubernetes configs containing the Services and Deployments.

- 05-ingress-resouce.yaml: This is the configuration of the Ingress. It uses the test domain *.localdev.me. Every subdomain of localdev.me resolves to 127.0.0.1 through public DNS, so it can be used for local testing without editing the /etc/hosts file. Ingress is configured to route as shown in the following diagram:

Deploy the Ingress Controller. Execute the following:

kubectl apply -f 01-ingress-controller.yaml

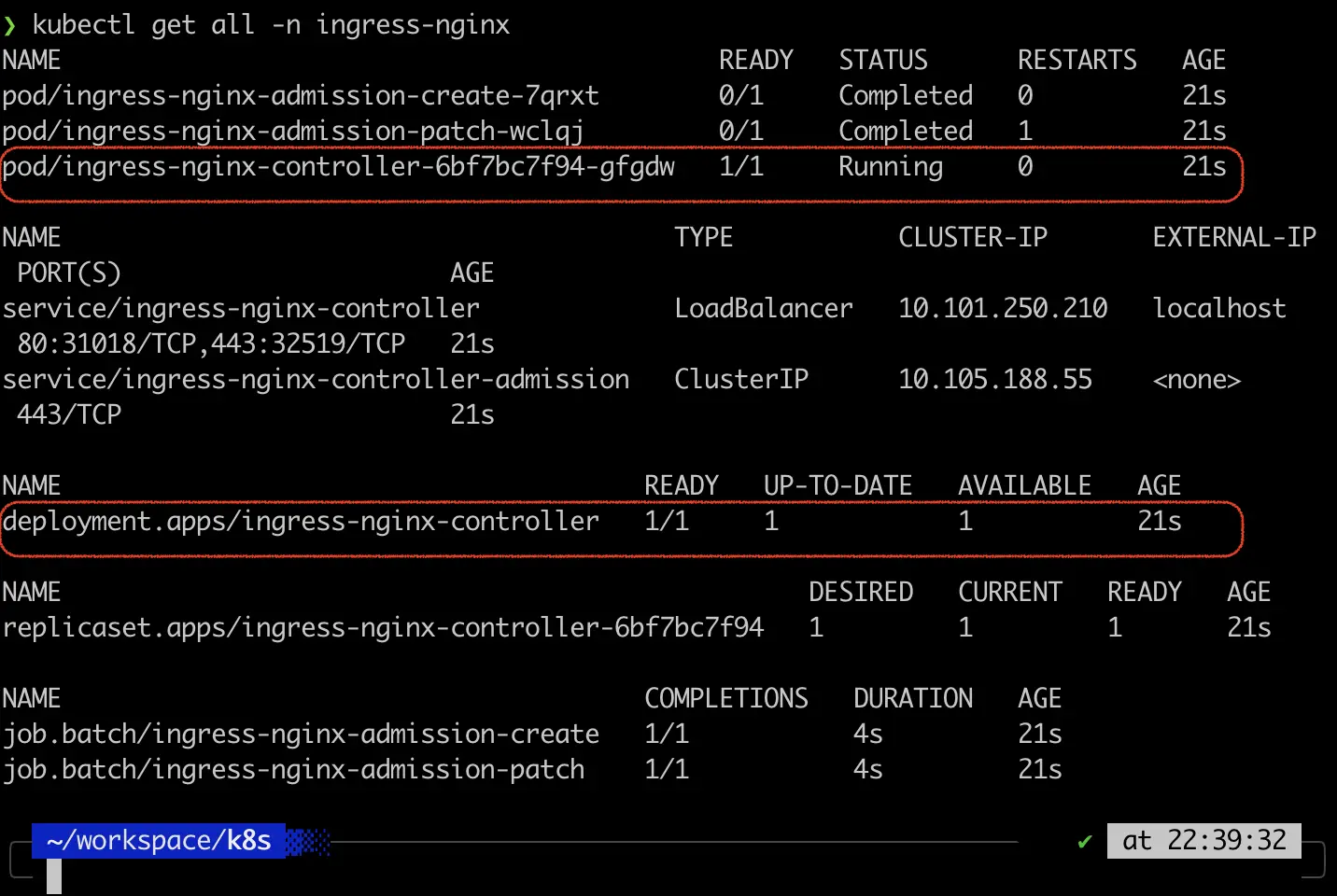

Execute the following to check on the deployment. The controller pod must be running and the Deployment must be ready:

kubectl get all -n ingress-nginx

Deploy the sample applications. Execute the following:

kubectl apply -f 02-nginx-webserver.yaml

kubectl apply -f 03-tomcat-webappserver.yaml

kubectl apply -f 04-httpd-webserver.yaml
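The sample files themselves are not reproduced here. As a rough, hypothetical sketch of what a typical file of this kind contains, assuming the name nginx-webserver, the public nginx image, and port 80 (the real 02-nginx-webserver.yaml may differ), a Deployment plus a ClusterIP Service could look like this:

```yaml
# Hypothetical sketch of a sample app; not the repository's actual file.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-webserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx-webserver
  template:
    metadata:
      labels:
        app: nginx-webserver   # matched by the Deployment selector and the Service
    spec:
      containers:
        - name: nginx
          image: nginx         # public Docker Hub image
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-webserver
spec:
  type: ClusterIP              # reachable only inside the cluster
  selector:
    app: nginx-webserver
  ports:
    - port: 80
      targetPort: 80
```

The Service can stay ClusterIP because external traffic arrives through the Ingress Controller rather than through a NodePort or LoadBalancer of its own.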

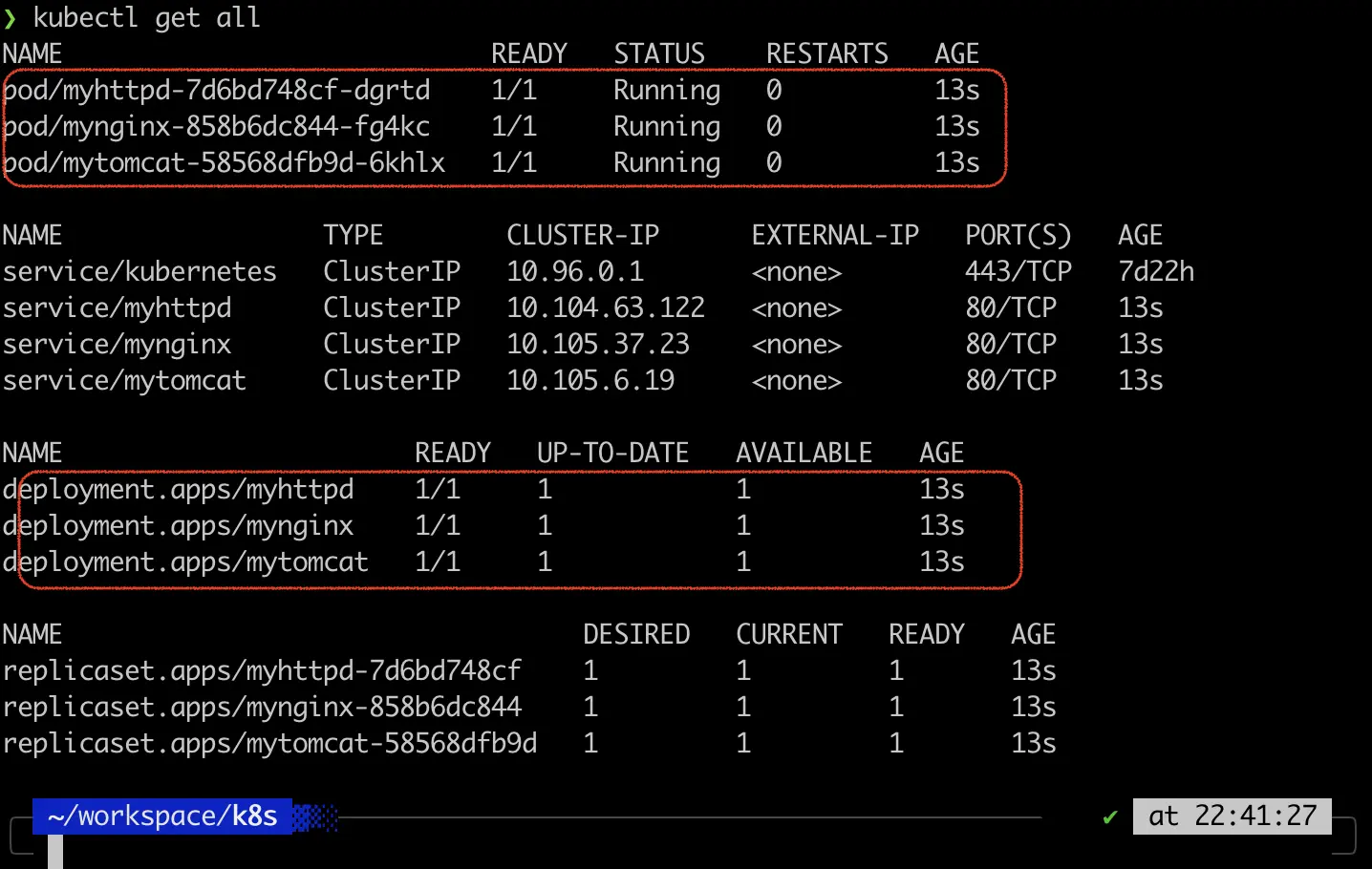

Execute the following to check on the deployments. All pods must be running and all deployments must be ready:

kubectl get all

Deploy the Ingress resources. Execute the following to deploy the Ingress resources:

kubectl apply -f 05-ingress-resouce.yaml

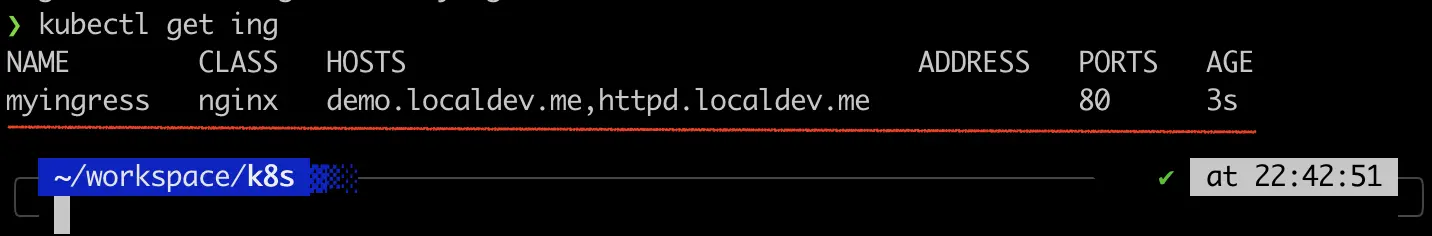

Execute the following to check on the Ingress resources:

kubectl get ing

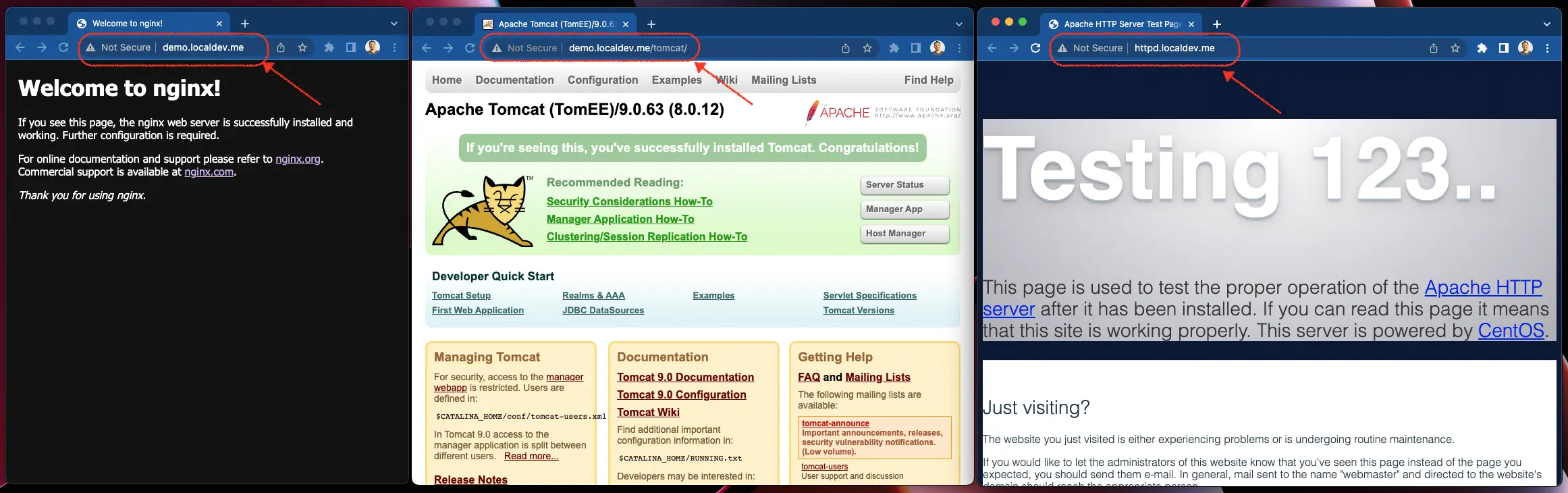

Access the following URLs in your web browser. All URLs should bring you to the intended services:

http://demo.localdev.me

http://demo.localdev.me/tomcat/

http://httpd.localdev.me
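The contents of 05-ingress-resouce.yaml are not shown in this post. As a hypothetical sketch of routing that would produce the behavior above, assuming Services named nginx-webserver, tomcat-webappserver, and httpd-webserver on the ports shown (these names and ports are guesses, not taken from the repository):

```yaml
# Hypothetical Ingress; Service names and ports are assumptions.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: demo-ingress
spec:
  ingressClassName: nginx
  rules:
    - host: demo.localdev.me        # virtual host 1
      http:
        paths:
          - path: /tomcat
            pathType: Prefix
            backend:
              service:
                name: tomcat-webappserver
                port:
                  number: 8080
          - path: /
            pathType: Prefix
            backend:
              service:
                name: nginx-webserver
                port:
                  number: 80
    - host: httpd.localdev.me       # virtual host 2
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: httpd-webserver
                port:
                  number: 80
```

When several paths match a request, Kubernetes gives precedence to the longest matching path, so /tomcat/ goes to Tomcat while everything else on demo.localdev.me falls through to /. The Host header then decides between the two rules, which is virtual hosting in action. Because the path is forwarded unchanged, the Tomcat backend in this sketch would need to serve content under /tomcat/ (the real file may use a rewrite annotation instead).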

Conclusion

That’s all, folks. We have gone over the what, why, and how of Kubernetes Ingress.

It is a powerful OSI layer 7 load balancer ready to be used with your Kubernetes cluster. There are free and open source Ingress Controllers as well as commercial ones, listed on the kubernetes.io page on Ingress controllers.
