PubCon Vegas: 7 Takeaway Nuggets

I’m back at work after last week’s PubCon Vegas. I published several articles about specific sessions, but I wanted to provide some nuggets on recurring themes of the conference.

Google Caffeine Update

This year Google rolled out changes referred to as the Google Caffeine update. The update increases the speed and size of the index, moves Google search to real time, and improves the relevancy and accuracy of search results. It was a popular topic at the conference, but not much light was shed on how the algorithm changes would affect search results, if at all. I’ll have to keep an eye on this to see if there are any significant changes in End Point’s search performance.

Bing

Bing is gaining traction. They want to get [at least] 51% of the search market share.

Social media

Social media was a hot topic at the conference. An entire track was allocated to Twitter topics on the first day. However, it still pales in comparison to search: of all referrals on the web, search accounts for 98% and social media for less than 1% (view referral data here). Dr. Pete from SEOmoz nicely summarized the elephant in the room at PubCon regarding social media: it’s important to measure social media response to determine whether it provides business value.

Ecommerce Advice

I asked Rob Snell, author of Starting a Yahoo Business for Dummies, for the most important advice for ecommerce SEO he could provide. He explained the importance of content development and link building to target keywords based on keyword conversion. Basically, SEO efforts shouldn’t be wasted on keywords that don’t convert well. I typically don’t have access to client keyword conversion data, but this is great advice.
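To make the idea of ranking keywords by conversion concrete, here is a minimal sketch in Python. It assumes you can export visits and orders per keyword from your analytics tool; the function names and the input shape are my own invention for illustration, not something presented at the session.

```python
def conversion_rates(stats):
    """Map each keyword to orders / visits.

    `stats` is a hypothetical export: keyword -> (visits, orders).
    Keywords with zero visits get a rate of 0.0 to avoid dividing by zero.
    """
    return {
        keyword: (orders / visits if visits else 0.0)
        for keyword, (visits, orders) in stats.items()
    }


def worth_targeting(stats, min_rate):
    """Return keywords whose conversion rate is at least `min_rate`,
    best converters first."""
    rates = conversion_rates(stats)
    keep = [keyword for keyword, rate in rates.items() if rate >= min_rate]
    return sorted(keep, key=lambda keyword: rates[keyword], reverse=True)
```

The cutoff passed as `min_rate` is a business decision, so the sketch leaves it to the caller rather than assuming a value.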

Internal SEO Processes

Another recurring theme at PubCon was that internal SEO processes are often a much bigger obstacle than the actual SEO work. It’s important to get the entire team on your side. Alex Bennert of the Wall Street Journal discussed understanding your audience when presenting SEO. Here are some examples of appropriate topics for a given audience:

- IT Folks: sitemaps, duplicate content (parameter issues, pagination, sorting, crawl allocation, dev servers), canonical link elements, 301 redirects, intuitive link structure

- Biz Dev & Marketing Folks: syndication of content, evaluation of vendor products & integration, assessing SEO value and link equity of partner sites, microsites, leveraging multiple assets

- Content Developers: on-page element best practices, linking, anchor text best practices, keyword research, keyword trends, analytics

- Management: progress, timelines, roadmaps

On the topic of internal processes, I was entertained by the various comments expressing the developer-marketer relationship, for example:

- “Don’t ever let a developer control your URL structure.”

- “Don’t ever let a developer control your site architecture.”

- “This site looks like it was designed by a developer.”

Apparently developers are the most obvious scapegoat. Back to the point, though: it often takes more effort to get understanding of and support for SEO than to explain what actually needs to be done.

Search Engine Spam

Search engine spam detection is cool. During a couple of sessions with Matt Cutts, I became interested in writing code to detect search spam. For example:

- Crawling the web to detect links where the anchor text is ‘.’.

- Crawling the web to identify sites where robots.txt blocks ia_archiver.

- Crawling the web to detect pages with keyword stuffing.
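As a starting point, the three checks above could be sketched per page in Python using only the standard library. This is a rough sketch under my own assumptions, not anything Google described: it takes HTML and robots.txt content that has already been fetched, all class and function names are hypothetical, and the keyword stuffing check only reports how dominant the most frequent word is, leaving the threshold to you.

```python
import re
from collections import Counter
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class AnchorTextCollector(HTMLParser):
    """Collects (href, anchor text) pairs from an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []      # finished (href, text) pairs
        self._href = None    # href of the <a> currently open, if any
        self._text = []      # text fragments seen inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href") or ""
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def dot_anchor_links(html):
    """Return the hrefs of links whose visible anchor text is just '.'."""
    parser = AnchorTextCollector()
    parser.feed(html)
    return [href for href, text in parser.links if text == "."]


def blocks_ia_archiver(robots_txt, path="/"):
    """True if this robots.txt content disallows ia_archiver for `path`.

    A robots.txt that disallows every user agent is reported as well.
    """
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    return not robots.can_fetch("ia_archiver", path)


def top_term_density(text):
    """Return (most common word, its share of all words) for page text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words:
        return None, 0.0
    word, count = Counter(words).most_common(1)[0]
    return word, count / len(words)
```

None of these signals proves spam on its own. A real detector would need a crawler in front of these functions and would combine many signals before flagging anything.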

I’ve typically been involved in the technical side of SEO (duplicate content, indexation, crawlability), and haven’t been involved in link building or content development, but these discussions provoked me to start looking at search spam from an engineer’s perspective.

Google Parameter Masking

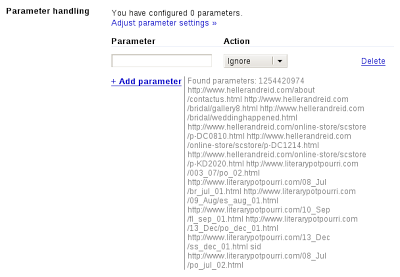

Apparently I missed the announcement of parameter masking in Google Webmaster Tools. I’ve helped battle duplicate content for several clients, so I was glad to hear about it at PubCon. This functionality was announced in October of 2009 and lets you suggest that the crawler ignore specific query parameters.
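To illustrate the effect of ignoring a parameter, here is a small Python sketch that strips chosen query parameters so that duplicate URLs collapse to one. It is only an illustration of the idea, not how Google implements it, and the function name and the `sessionid` parameter are hypothetical examples of mine.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def strip_params(url, ignored):
    """Return `url` with every query parameter named in `ignored` removed."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in ignored
    ]
    return urlunsplit(parts._replace(query=urlencode(kept)))


# Two URLs that differ only by a session parameter become identical.
first = strip_params("http://www.example.com/shoes?color=red&sessionid=abc", {"sessionid"})
second = strip_params("http://www.example.com/shoes?color=red&sessionid=xyz", {"sessionid"})
```

Running the same kind of normalization over a crawl or a server log is also a quick way to see which parameters are generating duplicate pages in the first place.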

Parameter masking is yet another solution for managing duplicate content, in addition to the rel=“canonical” link element, creative uses of robots.txt, and the nofollow attribute. The ideal solution for SEO would be to build a site architecture that doesn’t require any of these solutions. However, as developers we have all experienced how legacy code persists, and sometimes a low-effort, high-return solution is the best short-term option.
